Unified Data and Analytics for your Business

Databricks is the market-leading Data Lakehouse product and a leading all-purpose cloud-based Data Processing and Analytics platform. It provides a single point of entry for a wide variety of people in any data-driven organization, from domain experts and analysts to data engineers and data scientists. Unify all your organization's data into a single, centrally governed, scalable storage space and turn it into reusable, accessible data assets for reporting, planning, and forecasting.

Databricks is the pioneer and namesake in the data lakehouse space. This architecture separates storage and compute resources, enabling both components to scale independently. Communication between storage and compute layers uses protocols and concepts that natively support parallelization and horizontal scaling. This way, much larger data volumes can be stored and processed much more efficiently than with traditional database systems.

Our NextLytics experts have singled out Databricks as the most feature-complete and most coherent full-spectrum cloud-based Data and Analytics platform on the market. Our customers leverage Databricks' highly efficient data processing and integration capabilities to gain insight into their business, build competitive advantage, and produce measurable value from data.

Webinar Recording: The Plug-and-Play Future of Data Platforms

Whitepaper: Databricks vs Fabric vs SAP BDC vs Dremio

Databricks in a Nutshell

Ensure Security, Governance, and Compliance with Unity Catalog

- Manage who has access to your data and keep track of all changes to your data structures - all under one roof

- Central, unified governance across Warehouse, Streaming, ETL, AI and Machine Learning applications, and Business Intelligence


Openness accelerates Innovation and Insights

- Databricks integrates leading open source tools with open data formats

- Delta Lake UniForm harmonizes data access across formats and protocols, e.g. Apache Iceberg, Apache Hudi

- Full, seamless interoperability with other major platforms like Snowflake, Google BigQuery, Amazon Redshift, and Microsoft Fabric


AI-powered Data Intelligence

- Databricks integrates context-aware AI assistance and enables faster workflows for technical and analytical tasks

- Best-in-class natural-language analytics and data visualization empower non-technical business experts to leverage data as a competitive advantage

- The fully featured Databricks ML/AI development and operations framework is part of your regular warehouse and BI platform, offering everything your business needs under one roof


Optimize TCO with Transparent Pricing

- Databricks is billed on a consumption model: You only pay for the compute and storage resources that are actively used

- Optimized resource consumption: Automatically scaling computing units and on-demand serverless resources efficiently control consumption


Best in Class Data Lakehouse

- The Data Lakehouse architecture enables maximum performance and cost efficiency

- Continuous innovation: Databricks is run by the inventors of popular open source technologies like Apache Spark, MLflow, Delta Lake, and Unity Catalog

- Forrester Wave™: Data Lakehouses, Q2 2024: Databricks was named a Leader among the evaluated data lakehouse platforms


How Databricks Stacks Up Against Big Players like SAP Business Data Cloud and Microsoft Fabric

Databricks is the most feature-rich and innovative cloud data platform on the market. With the emergence of data lakehouse architecture, classic data warehouse technology is converging toward similar scalability and capabilities. In recent years, platforms have increasingly integrated tools for specific aspects of the data, analytics, and AI landscape. Today, several vendors offer complete solutions for nearly every possible scenario that companies might encounter when dealing with data:

- Microsoft Fabric

- SAP Business Data Cloud

- Databricks

- Google BigQuery, Amazon Redshift from AWS, and Snowflake offer their native solutions and additional ecosystems.

In addition, Dremio and MotherDuck are carving out their niches in the emerging lakehouse market.

Weighing the feature scope and maturity of all these potential alternatives against one another is nearly impossible. We have compiled a brief cross-comparison of four major players into a high-level matrix for you:

| | Databricks | SAP Business Data Cloud | Microsoft Fabric | Dremio |
| --- | --- | --- | --- | --- |
| Service Model | Cloud Platform-as-a-Service | Cloud Platform-as-a-Service | Cloud Platform-as-a-Service | Public/Private Cloud Software-as-a-Service (Dremio or any cloud provider) |
| Feature Scope | Data Lakehouse, | Data Warehouse, | Data Warehouse, Data Lakehouse, | Data Lakehouse, Self-Service Analytics (SQL) |
| Strengths | Openness/Connectivity, cost-efficiency | Enterprise integration (SAP) | Enterprise integration (Microsoft) | Openness/Connectivity, cost-efficiency |
| Summary | All-in-one platform with pro-code / low-code flavour | All-in-one platform with low-code / no-code flavour | All-in-one platform with low-code / no-code flavour | Specialized platform with pro-code / low-code flavour |

Next-Gen Data Warehousing - your data ecosystem reimagined

Databricks combines state-of-the-art components and functions for data warehousing and data engineering in a single platform. Whether you are faced with large volumes of data, high velocity, unpredictable patterns, or frequently changing source schemas, Databricks offers the right tools to efficiently capture and harmonize your data. Building semantically rich, normalized data models for your warehouse becomes a breeze with efficient virtual layering on top of Delta Lake tables. It has never been easier to build a central, trusted data source for all your analytics needs—all on a single platform.

All data sources - one platform

Databricks simplifies the task of unifying all relevant data - whether it is historical batch data that needs to be imported from legacy systems or real-time streaming data flowing in from various sources.

By offering a single environment that centralizes data ingestion and management, Databricks eliminates the fragmentation often seen in multi-tool data pipelines and provides engineering teams with a reliable foundation for analytics, reporting, and machine learning projects.

Key features include:

‣ Auto Loader

Monitor changes to an object store or file system source and automatically process any changed or new files in real-time.

‣ Lakeflow ETL

Intuitive graphical user interface for building data loading pipelines for various source systems.

‣ Lakehouse Federation

Integrate existing databases as plug-ins, including direct queries—as if they were another namespace in the Databricks Unity Catalog.

‣ Spark-JDBC

Use native Apache Spark job parallelization to transfer data to and from any remote JDBC-compatible system with maximum throughput.
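As a rough illustration of the first and last features above, the following Python sketch builds the reader options for an Auto Loader stream and for a partitioned Spark JDBC read. The helper names (`auto_loader_options`, `jdbc_read_options`, `ingest_new_files`, `read_jdbc_table`) and all paths and table names are hypothetical. The functions that take a `spark` argument assume an existing SparkSession on a Databricks cluster and are not meant to run elsewhere.

```python
from typing import Dict


def auto_loader_options(file_format: str, schema_location: str) -> Dict[str, str]:
    """Reader options for an Auto Loader ("cloudFiles") stream."""
    return {
        "cloudFiles.format": file_format,              # format of the incoming files
        "cloudFiles.schemaLocation": schema_location,  # where the inferred schema is tracked
    }


def jdbc_read_options(url: str, table: str, partition_column: str,
                      lower: int, upper: int, partitions: int) -> Dict[str, str]:
    """Options for a parallel JDBC read, split into ranges of `partition_column`."""
    if partitions < 1:
        raise ValueError("partitions must be at least 1")
    if lower >= upper:
        raise ValueError("lower bound must be smaller than upper bound")
    return {
        "url": url,
        "dbtable": table,
        "partitionColumn": partition_column,
        "lowerBound": str(lower),
        "upperBound": str(upper),
        "numPartitions": str(partitions),
    }


def ingest_new_files(spark, source_path: str, target_table: str, checkpoint_path: str):
    """Incrementally load new JSON files into a table (assumes a Databricks SparkSession)."""
    stream = (
        spark.readStream.format("cloudFiles")
        .options(**auto_loader_options("json", checkpoint_path))
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)
        .trigger(availableNow=True)  # process everything that is new, then stop
        .toTable(target_table)
    )


def read_jdbc_table(spark, options: Dict[str, str]):
    """Read a remote table over JDBC with one Spark task per partition range."""
    return spark.read.format("jdbc").options(**options).load()
```

The option builders are kept separate from the Spark calls so that the configuration can be reviewed and tested without a cluster. The partition bounds only control how the read is split across tasks; they do not filter rows.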

Efficient use of scalable computing power for big data and analytics

Databricks uses Apache Spark as its underlying distributed computing engine to process large amounts of data and demanding workloads. The platform automatically scales resources to match the scope of your tasks. Thanks to the consumption-based pricing model, you only pay for the computations you actually use. This flexibility allows teams to process large data sets, perform complex transformations, and conduct advanced analytics without worrying about capacity constraints or unexpected costs.

Collaborative development without tool fragmentation

With Databricks, developers and data scientists can work together effectively in shared workspaces. The platform supports multiple programming languages, including Python, SQL, and R, making it the ideal environment for developing and maintaining data pipelines. Furthermore, built-in Git integration facilitates version control, encourages rigorous documentation practices, and enables teams to collaborate and track development progress in a transparent manner. Solutions can be developed, tested, and deployed directly on the platform via Jupyter Notebooks, allowing development teams to move seamlessly from prototyping to production without having to switch between different tools.

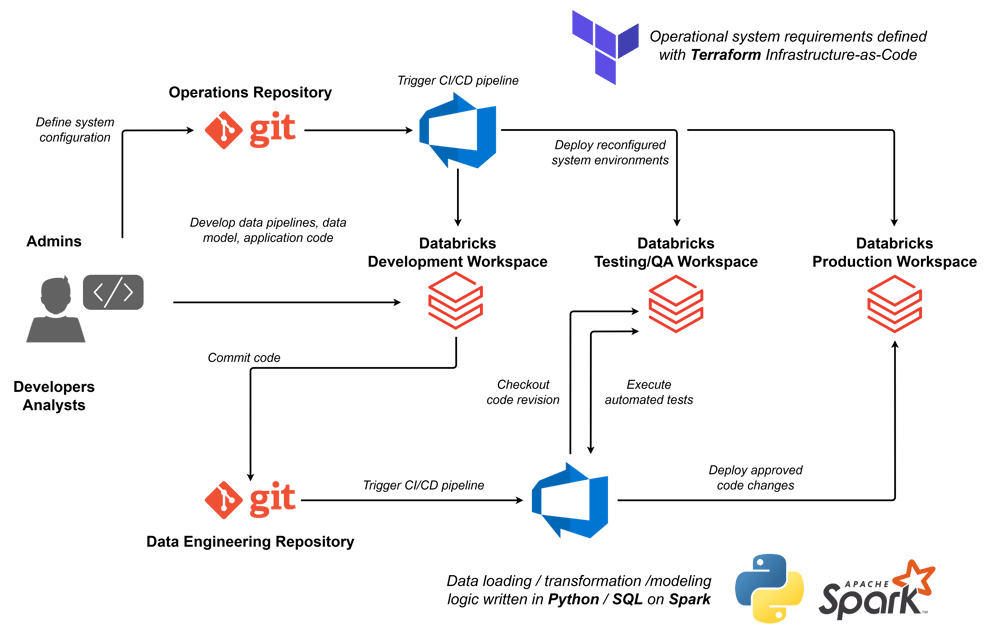

Databricks DevOps processes, using Azure DevOps as an example. The upper half shows the Infrastructure-as-Code process above the three Databricks system environments: Terraform defines, checks, and deploys system components such as workspaces and authorization management, Spark clusters, and Databricks jobs. The lower half outlines the development process for data processing in the Lakehouse: SQL and Python notebooks as well as central Python libraries are developed in the Databricks dev workspace, versioned and synchronized with Git, automatically tested, and delivered via a deployment pipeline.

Single-Source of Truth for Reliable Data Products

With Databricks, you can create a fully virtual real-time data model across your logical layer through Delta Live Tables. Follow Data Vault or Medallion architecture patterns and use industry-standard collaborative modeling tools like dbt to define the expected outcome instead of dealing with the technical batch processing logic. Once you have established the centrally governed data model in Unity Catalog, you can track performance and change history throughout the entire landscape with built-in Delta Table history and data lineage features. AI-powered, human-readable annotations in the Unity Catalog make it easier for business experts to navigate the data model and gain insight through the self-service SQL query interface and BI dashboards.
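To make the Delta Table history feature a little more concrete, here is a minimal Python sketch that builds the SQL statements for inspecting a table's change history and for reading an earlier version. `DESCRIBE HISTORY` and `VERSION AS OF` are Delta Lake SQL syntax. The helper names and the example table `main.sales.orders` are hypothetical, and the statements would be run from a notebook or SQL warehouse.

```python
import re

# Conservative pattern for unquoted identifiers (an assumption of this sketch).
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def qualified_name(catalog: str, schema: str, table: str) -> str:
    """Build a three-level Unity Catalog name: catalog.schema.table."""
    parts = (catalog, schema, table)
    for part in parts:
        if not _IDENTIFIER.match(part):
            raise ValueError(f"unsupported identifier: {part!r}")
    return ".".join(parts)


def describe_history_sql(table_name: str, limit: int = 10) -> str:
    """Statement listing the most recent commits of a Delta table."""
    return f"DESCRIBE HISTORY {table_name} LIMIT {int(limit)}"


def version_as_of_sql(table_name: str, version: int) -> str:
    """Statement reading the table as it was at a given version."""
    return f"SELECT * FROM {table_name} VERSION AS OF {int(version)}"


orders = qualified_name("main", "sales", "orders")
print(describe_history_sql(orders, limit=5))
print(version_as_of_sql(orders, version=3))
```

On Databricks, each string could be passed to `spark.sql(...)`. Validating identifiers before formatting them into SQL keeps the helpers safe to use with names that come from configuration.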

Combining BI and AI Strategy - with Databricks

Databricks is a mature and constantly evolving development platform for ML, deep learning, generative AI, retrieval augmented generation, agentic AI systems and other variants of current data science trends. Like no other software product, Databricks combines AI and BI into a true data intelligence workspace - for fast insights and competitive advantage.

Understand data at a glance - with intuitive dashboards from Databricks

Databricks dashboards make it easy to monitor key performance metrics in real time without needing extra software or complex setup. By providing a range of visualization options, data teams can quickly create easy-to-understand reports. This allows you to identify trends faster, share insights directly, and make informed decisions for your business.

Unlock Business Value with State-of-the-Art Machine Learning Workflows

The process of developing machine learning models, preparing the input data, fine-tuning and evaluating, and finally hosting the best model version for production use, is generally referred to as MLOps. The Databricks ML platform allows you to train, deploy, and manage machine learning models along this process within an integrated environment that directly accesses and processes your data. This close link between data and modeling allows you to gain insights based on the latest information. Intelligent automation and predictive analytics open up new opportunities and optimize workflows.

Instead of combining several software products to support an end-to-end MLOps process, Databricks provides your Data Scientists with all the tools they need:

‣ Data access and feature engineering

Bring all your source data into Unity Catalog and use Jupyter Notebooks or Spark SQL to turn raw data into the optimized features for your ML model

‣ Feature Store

Fully defined features are stored in Unity Catalog using Delta Lake format and presented as dedicated Feature Tables for consumption in ML models

‣ Model Training

To develop and train new ML models, you can use the compute power of Apache Spark, any third-party Python libraries, and machine learning frameworks like TensorFlow, XGBoost, Keras, or Scikit-Learn. Keep track of versions, experiments, and performance during the development process with the fully integrated MLflow environment.

‣ Model Tracking and Evaluation

Model versions are stored directly in Unity Catalog, and the full experiment and performance history for evaluation is tracked with MLflow.

‣ Model Serving

Production-ready models can be served directly from Unity Catalog under the central governance schema with MLflow via REST API, integrated in Spark SQL for ad-hoc inference in analytics queries, or turned into fully user-facing graphical applications with the Databricks Apps framework.
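The training, tracking, and registration steps above could look roughly like the following Python sketch. It assumes a Databricks environment with `mlflow` and `scikit-learn` installed; the function names, the model choice, and the example catalog, schema, and model names are hypothetical. The heavy imports happen inside the function so that the name helper can be used on its own.

```python
def uc_model_name(catalog: str, schema: str, model: str) -> str:
    """Three-level model name as used for models registered in Unity Catalog."""
    return f"{catalog}.{schema}.{model}"


def train_and_register(X, y, model_name: str):
    """Train a small classifier, track it with MLflow, and register the result.

    Assumes it runs on Databricks, where the tracking server is preconfigured.
    """
    import mlflow
    import mlflow.sklearn
    from sklearn.linear_model import LogisticRegression

    # Point the model registry at Unity Catalog.
    mlflow.set_registry_uri("databricks-uc")

    with mlflow.start_run() as run:
        model = LogisticRegression(max_iter=200)
        model.fit(X, y)

        # Record the parameter and metric that describe this experiment run.
        mlflow.log_param("max_iter", 200)
        mlflow.log_metric("train_accuracy", float(model.score(X, y)))

        # The input example lets MLflow infer a model signature,
        # which Unity Catalog expects for registered models.
        mlflow.sklearn.log_model(model, "model", input_example=X[:5])

    registered = mlflow.register_model(f"runs:/{run.info.run_id}/model", model_name)
    return registered.version


print(uc_model_name("main", "ml", "churn_classifier"))
```

A call such as `train_and_register(X, y, uc_model_name("main", "ml", "churn_classifier"))` would return the new model version, which can then be promoted and served under the same governance rules as tables.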

Breaking new ground with agentic and generative AI

Databricks drives innovation through Agentic and Generative AI, providing access to LLM base models and AI integration within SQL queries. The Mosaic AI Agent Framework on Databricks is a comprehensive toolkit designed to facilitate the development, deployment, and evaluation of production-ready AI agents, such as Retrieval Augmented Generation (RAG) applications. This framework supports integration with third-party tools like LangChain and LlamaIndex, allowing developers to use their preferred frameworks while leveraging Databricks’ managed Unity Catalog and Agent Evaluation Framework.

The Mosaic AI Agent Framework offers a variety of features to streamline agent development. Developers can create and parameterize AI agents, build agent tools to extend LLM capabilities, trace agent interactions for debugging, and evaluate agent quality, cost, and latency. Additionally, the framework supports improving agent quality using DSPy, automating prompt engineering and fine-tuning. Finally, agents can be deployed to production with features like token streaming, request/response logging, and a built-in review app for user feedback.

Empower Decision-Makers with AI

With the Databricks Assistant, Databricks provides a generative AI tool built on large language models that supports users in several ways: querying data in natural language, generating SQL or Python code, explaining complex code segments, and providing targeted help with troubleshooting.

The assistant, which is based on the DatabricksIQ intelligence engine, is deeply integrated into the Databricks platform and can provide personalized, contextual responses about your data, code, and parts of your configuration. More precisely, DatabricksIQ integrates information from your Unity Catalog, dashboards, notebooks, data pipelines, and documentation to generate output that fits your business structure and workflows.

Realize Enterprise-Wide Impact with Databricks

The Strategic Role of Databricks in Modern Cloud and Data Strategies

Foster Enterprise-Wide Collaboration and Governance:

With built-in security, compliance, and collaboration tools, Databricks ensures every stakeholder - from data engineers to business analysts to domain experts - can work efficiently and securely in the cloud.


Accelerate Business Outcomes by Simplifying Your Data Infrastructure:

By combining the functionalities of data lakes and warehouses under one Lakehouse architecture, Databricks reduces total cost of ownership, cuts complexity, and aligns data strategies with broader business objectives.


SAP and Databricks: What is Business Data Cloud?

The SAP Business Data Cloud combines several previously independent products into a single full-spectrum cloud data and BI platform:

- Modern cloud data warehouse backed by HANA in-memory database

- Tightly integrated with classic SAP enterprise products like ERP, HR, or even SAP BW warehouses and BI solutions

- Citizen developer capabilities allow sharing data inside an organization and empower business experts to build central data models

- Planning capabilities tightly coupled with data models from Datasphere or BW backend

SAP Databricks

- Mosaic AI and ML workspace

- Unity Catalog

- SQL Warehouse Query Engine

- development workspace & computing resources

At the beginning of 2025, SAP announced that a fully integrated Databricks workspace would become part of the SAP Business Data Cloud. Some of the core components of Databricks have been integrated into the SAP Business Data Cloud product suite:

- The Mosaic AI and ML workspace,

- The Unity Catalog of the lakehouse governance layer, and

- The SQL Warehouse query engine.

Also included are core Databricks features such as:

- Jupyter Notebooks as a development environment and

- Apache Spark and Photon Engine as scalable distributed computing resources.

SAP data models are seamlessly available in the Databricks catalog - and vice versa. This promises efficient, copy-free data integration between established SAP business domain data and third-party systems - or even unstructured data.

Technologically, this integration is based on Databricks' native data lakehouse innovations such as the Delta Lake table format and the Delta Sharing exchange protocol.
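For readers unfamiliar with Delta Sharing, the following Python sketch shows how a shared table is addressed with the open-source `delta-sharing` client. It illustrates the open protocol in general, not the SAP-specific integration, whose setup is not described here. The profile file path and the share, schema, and table names are hypothetical.

```python
def sharing_table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Address of a shared table: <profile file>#<share>.<schema>.<table>."""
    return f"{profile_path}#{share}.{schema}.{table}"


def load_shared_table(profile_path: str, share: str, schema: str, table: str):
    """Load a shared table into a pandas DataFrame.

    Assumes the `delta-sharing` package is installed and that `profile_path`
    points to a profile file issued by the data provider.
    """
    import delta_sharing

    return delta_sharing.load_as_pandas(
        sharing_table_url(profile_path, share, schema, table)
    )


print(sharing_table_url("config.share", "finance_share", "erp", "invoices"))
```

The recipient only needs the profile file and the table address; the data stays in the provider's storage and is read directly from there rather than being copied into a separate export.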

For companies that rely heavily on SAP and have already invested in the cloud-native Datasphere environment, SAP Databricks is a convenient solution. It offers an integrated alternative to a standalone Databricks solution.
