Steering your business based on evidence and insight from data promises a competitive advantage and considerable potential for optimizing operations. To reap these benefits, the right data first needs to be collected and then fed into the right analytical models, for example to predict turnover or sales for future periods. Business Intelligence software like Power BI or SAP Analytics Cloud supports simple prediction methods and planning but quickly runs out of steam when use cases become more complex.

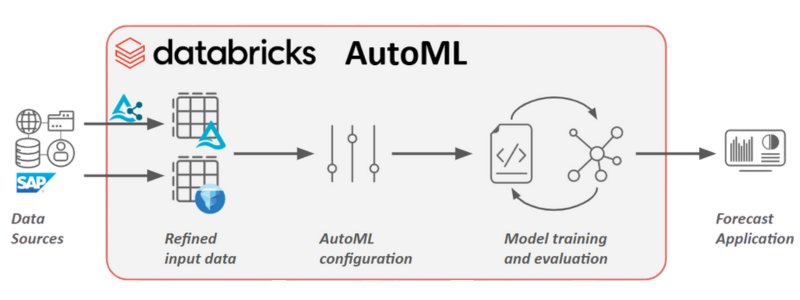

At the other end of the spectrum, Databricks is a comprehensive Machine Learning, Data Science and AI development platform that can be used to create innovative and highly precise prediction applications. Between these two extremes, predictive analytics of moderate complexity can solve many business problems. “AutoML” seeks to cover this middle ground, giving accurate predictions without requiring a doctorate in higher mathematics. We showcase the Databricks AutoML capabilities for time series forecasting to demonstrate how the platform can help turn business data into actionable insight with little to no programming effort.

Read our "Hands-on Databricks" blog series:

1. How to leverage SAP Databricks to integrate ServiceNow Data
2. Data Modeling with dbt on Databricks: A Practical Guide
3. Databricks AutoML for Time-Series: Fast, Reliable Sales Forecasting
4. Advanced Data Warehousing in Databricks with DBT Macros and Snapshots

Databricks AutoML

Databricks brings data engineering, analytics, and machine learning together on the Lakehouse - unifying scalable compute, reliable storage, and governed collaboration. In a previous article on Databricks & MLflow, we tracked experiments, versioned models, and streamlined deployment. We’ll build on that foundation here: starting from a simple sales dataset, we’ll first visualize trend and seasonality, then let Databricks AutoML explore strong forecasting candidates. AutoML not only trains and tunes models at scale, it also generates transparent notebooks and registers results with MLflow, so you can reproduce, customize, and promote the best model into production.

AutoML highlights we’ll leverage:

- Automated time-series featurization

Auto-generated lags, moving averages, holiday signals, and calendar features to capture trend and seasonality without manual engineering.

- Model search + tuning, out of the box

A plethora of models like Prophet, ARIMA/SARIMA, gradient-boosted regressors, and deep learners (where available), with scalable hyperparameter optimization.

- Built-for-humans notebooks

Auto-generated, readable notebooks showing data prep, feature creation, training code, and evaluation, so you can audit, customize, and rerun.

- Robust evaluation & backtesting

Rolling-window validation, forecast plots, error metrics (MAPE, RMSE), and baseline comparisons to ensure lift over naïve models.

- One-click operationalization

Seamless MLflow tracking, model registry versioning, and straightforward promotion to batch scoring or real-time Model Serving.
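To make the featurization idea more tangible, here is a minimal sketch of what lag, moving-average, and calendar features look like for a monthly series. It is a hand-written illustration, not the code AutoML generates; the function name `add_time_series_features`, the chosen lags, and the sales figures are all assumptions made for this example.

```python
from datetime import date
from statistics import mean


def add_time_series_features(rows, lags=(1, 12), window=3):
    """Return one feature dict per (month_start, sales) row.

    Lag and moving-average values use only earlier observations, so no
    feature leaks information from the month being predicted.
    """
    rows = sorted(rows)  # chronological order
    sales = [value for _, value in rows]
    features = []
    for i, (day, value) in enumerate(rows):
        record = {
            "date": day,
            "sales": value,
            "month": day.month,                    # calendar feature
            "quarter": (day.month - 1) // 3 + 1,   # calendar feature
        }
        for lag in lags:
            # Value observed `lag` months earlier, or None if unavailable.
            record[f"lag_{lag}"] = sales[i - lag] if i >= lag else None
        # Mean of the previous `window` months, excluding the current one.
        record[f"ma_{window}"] = mean(sales[i - window:i]) if i >= window else None
        features.append(record)
    return features


# Hypothetical monthly sales figures, for illustration only.
history = [(date(2023, m, 1), 100 + 10 * m) for m in range(1, 13)]
history.append((date(2024, 1, 1), 115))

feats = add_time_series_features(history)
print(feats[-1])
```

The 12-month lag is what lets a model relate this January to last January, which is the kind of signal that matters for strongly seasonal sales data.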

Databricks & AutoML end-to-end example

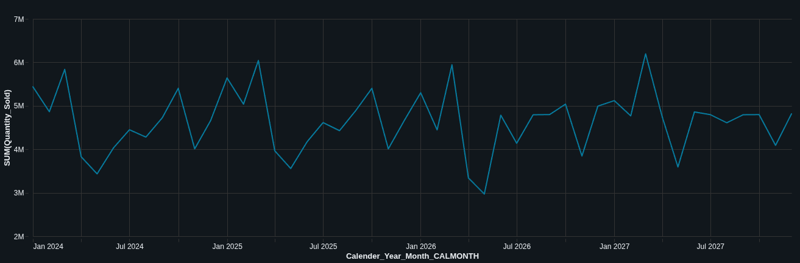

Our goal is to forecast total monthly sales of a retail business. We start by visualizing the sales dataset in order to identify trends, patterns and seasonality.

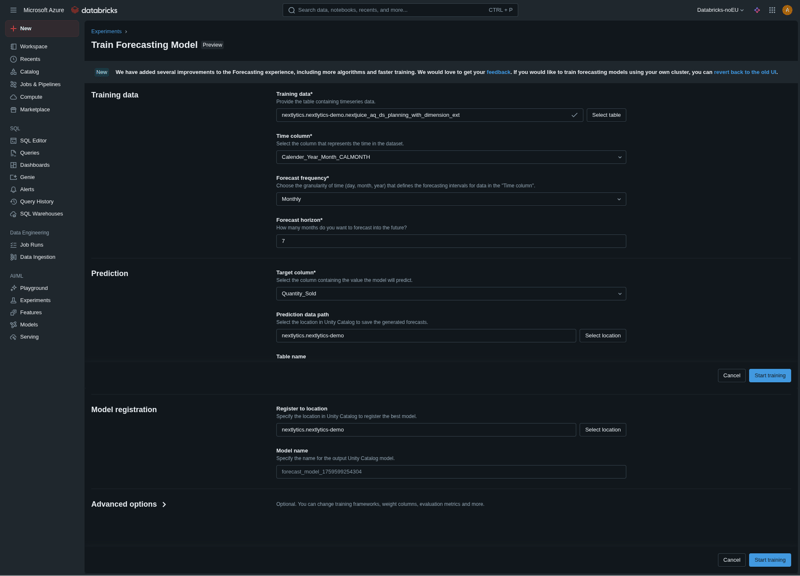
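Alongside a chart, seasonality can also be checked numerically. The sketch below averages sales per calendar month and expresses each month relative to the overall mean, so values above 1 mark stronger-than-average months. The helper `seasonal_profile` and the sample figures are hypothetical and only mimic the shape of a series with a spring peak; they are not the article's dataset.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def seasonal_profile(rows):
    """Average sales per calendar month, relative to the overall mean."""
    by_month = defaultdict(list)
    for day, value in rows:
        by_month[day.month].append(value)
    overall = mean([value for _, value in rows])
    return {month: mean(values) / overall
            for month, values in sorted(by_month.items())}


# Hypothetical two years of monthly sales with a peak in March.
pattern = [100, 110, 160, 90, 80, 85, 90, 95, 100, 105, 110, 115]
rows = [(date(year, m, 1), pattern[m - 1])
        for year in (2022, 2023) for m in range(1, 13)]

profile = seasonal_profile(rows)
peak_month = max(profile, key=profile.get)
low_month = min(profile, key=profile.get)
print(peak_month, low_month)
```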

There is a clear pattern in the dataset: sales peak around March and dip in the months right after, followed by a slow recovery over the rest of the year. We can now proceed to training and optimizing the forecasting models. First we define the experiment parameters: the time and target columns, the forecast horizon, and the storage locations for model registration and predictions.
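The same parameters can also be set in code rather than in the UI. The sketch below assumes the classic `databricks.automl.forecast` Python API is available on a Databricks ML runtime; the parameter names follow that API as we understand it and should be checked against the documentation for your runtime version (monthly frequency, for instance, may require a recent ML runtime). The column names `total_sales` and `month` and the helper functions are hypothetical.

```python
def build_forecast_params(target_col, time_col, horizon, frequency="M",
                          primary_metric="smape", timeout_minutes=30,
                          output_database=None):
    """Collect and sanity-check the settings for an AutoML forecasting run."""
    if horizon < 1:
        raise ValueError("horizon must be at least one period")
    params = {
        "target_col": target_col,      # column to predict
        "time_col": time_col,          # timestamp column
        "frequency": frequency,        # "M" = monthly observations
        "horizon": horizon,            # number of future periods to forecast
        "primary_metric": primary_metric,
        "timeout_minutes": timeout_minutes,
    }
    if output_database is not None:
        # Assumed to control where predictions are written.
        params["output_database"] = output_database
    return params


def run_forecast_experiment(dataset, params):
    """Start the experiment; only works where databricks.automl is installed."""
    from databricks import automl  # assumption: Databricks ML runtime
    return automl.forecast(dataset=dataset, **params)


# Hypothetical column names; seven months ahead, as in the example.
params = build_forecast_params(target_col="total_sales", time_col="month", horizon=7)
print(params)
# On a Databricks ML cluster, with `sales_df` holding the sales table:
# summary = run_forecast_experiment(sales_df, params)
```

Keeping the parameters in a plain dictionary makes the experiment setup easy to review and version before any compute is spent.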


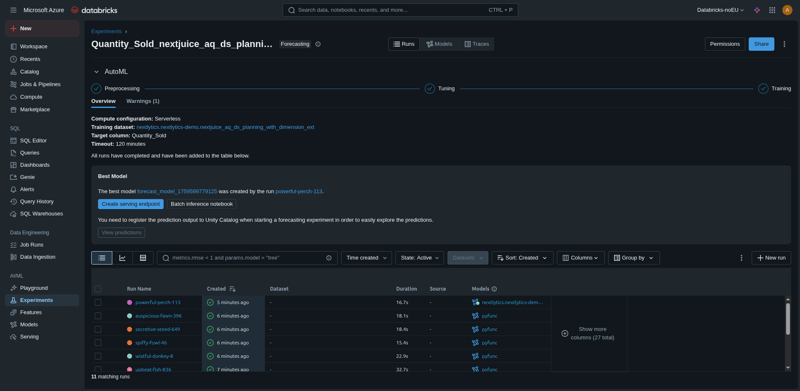

Now we are ready to start the experiment and let AutoML do its work. Data preprocessing, model tuning and training complete in a matter of minutes without user intervention, and the experiment runs are available for further inspection. Moreover, the best model is stored in Unity Catalog, ready for deployment and serving.

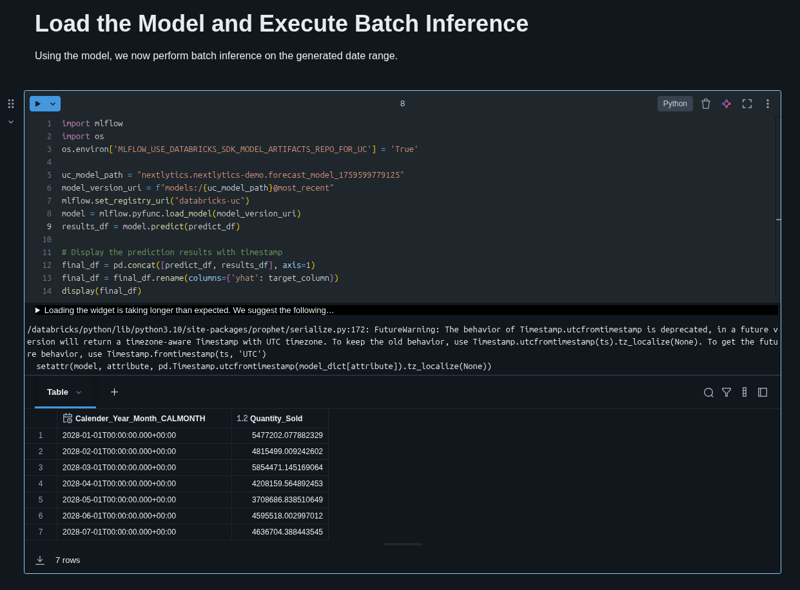

Several time series prediction models and algorithms are explored, scored and optimized to obtain the most accurate predictions, based on metrics such as MAPE (mean absolute percentage error) and RMSE (root mean square error). A Python notebook is generated automatically, letting us execute the model and make predictions for future periods.
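For readers who want to see what these two metrics measure, here is a minimal sketch using their standard definitions. The numbers are made up for illustration and are not results from the experiment.

```python
from math import sqrt


def mape(actual, predicted):
    """Mean absolute percentage error in percent; actual values must be non-zero."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean square error, in the same unit as the data."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


# Illustrative values only.
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 180.0, 400.0]

print(round(mape(actual, predicted), 2))  # 6.67
print(round(rmse(actual, predicted), 2))  # 12.91
```

MAPE is scale-free and easy to communicate to business users, while RMSE penalizes large misses more heavily, so the two can rank candidate models differently.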

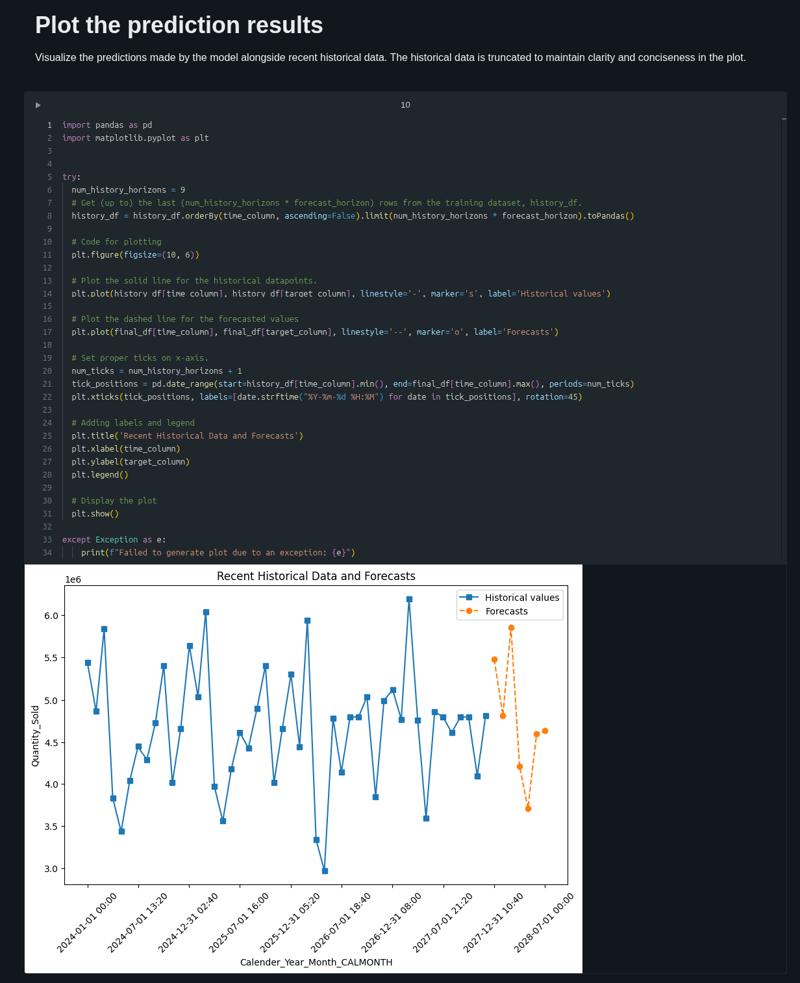

The optimal model is loaded and predictions for the next seven months are generated. We can get a glimpse of the future by looking at the plot generated by AutoML, which indicates that the forecast is quite accurate and close to the actual figures, capturing the trends and patterns found in the historical data.
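A useful sanity check for any forecast is whether it beats a naïve baseline on held-out history. The sketch below shows a generic rolling-origin backtest with a seasonal-naïve forecaster (repeat the value from twelve months earlier). It illustrates the idea only and is not how AutoML validates models internally; the function names and the synthetic series with 10% yearly growth are assumptions.

```python
def seasonal_naive(history, horizon, season_length=12):
    """Forecast by repeating the values observed one season earlier."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = history[-season_length:]
    return [last_season[i % season_length] for i in range(horizon)]


def rolling_backtest(series, forecaster, horizon, n_folds):
    """MAPE (in percent) per fold, training on an expanding window."""
    scores = []
    for fold in range(n_folds, 0, -1):
        cutoff = len(series) - fold * horizon
        train, test = series[:cutoff], series[cutoff:cutoff + horizon]
        forecast = forecaster(train, horizon)
        ape = [abs((a - f) / a) for a, f in zip(test, forecast)]
        scores.append(100 * sum(ape) / len(ape))
    return scores


# Synthetic series: three years, same seasonal shape, 10% growth per year.
pattern = [100, 110, 160, 90, 80, 85, 90, 95, 100, 105, 110, 115]
series = [base * growth for growth in (1.0, 1.1, 1.21) for base in pattern]

baseline_scores = rolling_backtest(series, seasonal_naive, horizon=6, n_folds=2)
print([round(score, 2) for score in baseline_scores])
```

A candidate model is worth promoting only if its backtest error is clearly below the baseline's; here the baseline misses by the yearly growth it cannot see.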

The sales forecast accurately predicted a significant spring sales surge, followed by a sudden decline. This dip was then succeeded by a moderate, gradual recovery. This demonstrates the model's ability to capture both cyclical and transient market dynamics, offering valuable insights for business planning.

AutoML in SAP Business Data Cloud

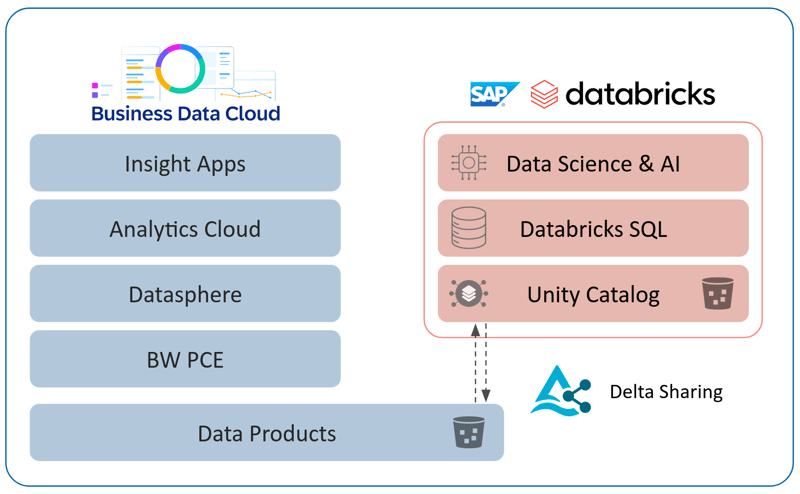

SAP systems are widespread among organizations and are one of the most prolific sources of operational and business data. Running advanced analytics outside of the cloud ecosystem is always possible but may require third-party tooling or custom coding to bring data from SAP to the analytical environment and back. SAP Business Data Cloud integrates the SAP Databricks workspace for scenarios such as the AutoML time-series forecast above. Data products can now be shared between SAP Datasphere and SAP Databricks without replication. SAP Databricks can be used to run AutoML or custom machine learning applications. Results can then be shared back into the SAP analytics ecosystem or with outside BI tools like Microsoft Fabric or Power BI.

SAP systems rely heavily on low-code/no-code user interfaces. While this is convenient for less technically minded personnel, functionality is limited in comparison to a pro-code system like Databricks. With the AutoML features demonstrated above, SAP Databricks bridges the gap between these two user experience philosophies and encourages experimentation and innovation on reliable business data straight from the primary operational source systems.

Databricks AutoML: Our Conclusion

Databricks AutoML makes time-series forecasting practical, transparent, and production-ready in one sweep: it automates smart featurization and model search, generates human-readable notebooks you can audit and adapt, evaluates rigorously with rolling backtests and metrics like MAPE/RMSE, and registers the winner for seamless serving via MLflow/Unity Catalog.

In our sales example, that end-to-end flow not only surfaced an accurate model but also captured the expected peak, post-peak dip, and gradual recovery - evidence that you can move from exploration to reliable forecasts without hand-crafting every step. If you need fast, trustworthy sales predictions that you can explain and deploy, AutoML on the Lakehouse is a compelling default.

