System integration is a field that no modern IT landscape can do without. As digital processes take over and both the degree of automation and the volume of data keep increasing, the number of IT systems grows as well. These systems can be linked in one direction or in both, depending on whether one system controls the other or whether important use cases require the two to interact.

Integrating proprietary systems with open-source solutions is particularly interesting, because connectors let integrated application scenarios combine the advantages of both. Connecting the workflows of the open-source workflow management platform Apache Airflow with the process chains in SAP Business Warehouse (BW), for example, builds a bridge between the SAP ecosystem and the open-source landscape.

In this blog article, we show which use cases call for combining Apache Airflow with SAP BW process chains and which implementation ideas led to our Airflow-BW connectors.

Workflow management in two systems

Both Apache Airflow and the process chains in SAP BW automate data processes, but they differ in purpose and approach. Before looking at what the combination achieves, we first outline the strengths of each system.

SAP BW acts as a hub for enterprise-wide data. Its main purposes include supplying data reliably and providing a high-performance framework for analyses and reports. Process chains simplify BW operations by automating recurring ETL steps. They are modeled in a graphical interface and scheduled, executed and monitored within BW.

The Apache Airflow workflow management platform is an open-source solution for scalable system orchestration that can be customized for a wide range of use cases. Workflows are defined in plain Python and can draw on many predefined operators, for example to launch and monitor Spark jobs, Python scripts and machine learning workflows. The community behind Airflow provides professional features such as a rich web interface and a full-featured REST API, which keep the open-source system competitive.

While Apache Airflow is increasingly used to coordinate other open-source systems, SAP BW and its process chains remain important because of their central role in day-to-day business. Next, we look at use cases that call for combining Airflow workflows with BW process chains.

Possible use cases

- Control and integration of a machine learning stack

If machine learning models are to be used productively in day-to-day business, training, evaluation and, depending on the design, prediction must be mapped to versioned workflows. Airflow's many operators are well suited to this. For example, BW can trigger model training as soon as current data has been loaded.

- Data manipulation with Python, shell scripts and many more

To make the best use of available developer capacity, it helps to widen the range of programming languages available for data manipulation and process modeling. Airflow can start scripts of any kind, and once the processing is complete, a BW process chain can take over the final steps.

- Free use of open-source libraries

Open-source libraries have become powerful and mature. Third-party systems can also be connected indirectly through Airflow, where connection details can be stored encrypted.

- Use of the powerful BW infrastructure

For performance reasons, data workflows in Airflow can benefit from the BW infrastructure: extensive ETL processes are moved to BW, which relieves the Airflow workers.

Implementation of two-sided system integration

The use cases above show that a two-way integration of the systems is desirable. It can build on existing connection points:

- Starting workflows in Airflow from the BW side

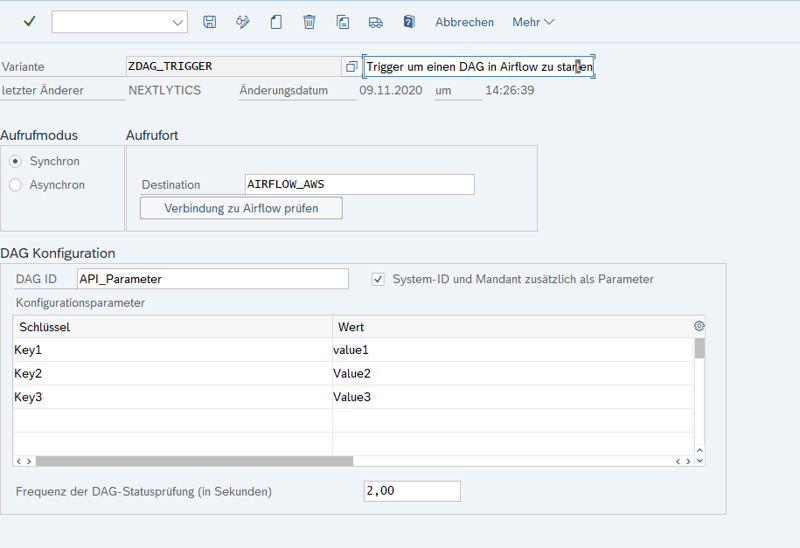

In SAP BW, a REST client can be implemented that starts selected workflows via the Airflow REST API as needed and passes the parameters required for their execution.
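To illustrate what such a REST client sends, here is a minimal Python sketch of the request (in BW itself the client would typically be written in ABAP). It targets the Airflow 2 stable REST API endpoint `POST /api/v1/dags/{dag_id}/dagRuns`, whose JSON body accepts a `conf` object for run parameters and an optional `dag_run_id`. It assumes the Airflow API is configured to accept basic authentication; the host, DAG ID and parameter names are hypothetical.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf, username, password, dag_run_id=None):
    """Build (but do not send) a request that triggers one Airflow DAG run.

    Assumes basic auth is enabled for the Airflow REST API.
    """
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    body = {"conf": conf}  # parameters the workflow can read at runtime
    if dag_run_id is not None:
        body["dag_run_id"] = dag_run_id  # otherwise Airflow generates an ID
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen()` would send it; the JSON response describes the new DAG run, including the `dag_run_id` that later status queries need.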

- Starting process chains in BW from the Airflow side

To connect to BW, Airflow can send HTTP requests from a Python script or, more conveniently, through an adapted version of the HTTP operator. On the BW side, an endpoint that starts the process chain must be created.
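A minimal sketch of the Airflow-side request, under loudly stated assumptions: the BW endpoint path `/sap/bc/zairflow/chain/start`, its JSON fields `chain_id` and `variables`, and the chain name are all hypothetical, since the actual interface is whatever service is created in BW. Only the `sap-client` query parameter follows the usual SAP URL convention. Authentication is omitted because it depends on how the BW service is configured.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical path of a custom BW service that starts a process chain.
BW_CHAIN_PATH = "/sap/bc/zairflow/chain/start"


def build_chain_start_request(bw_base_url, chain_id, client, variables=None):
    """Build (but do not send) a POST request asking BW to start a process chain."""
    query = urllib.parse.urlencode({"sap-client": client})
    url = f"{bw_base_url.rstrip('/')}{BW_CHAIN_PATH}?{query}"
    payload = {"chain_id": chain_id, "variables": variables or {}}  # hypothetical schema
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Inside a DAG, the same call could be wrapped in a `PythonOperator` or expressed through the HTTP operator with an Airflow connection that holds the BW host and credentials.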

However, merely triggering process chains and workflows does not amount to a proper integration scenario. In a business context, the following aspects also matter:

- Optionally executing the subsequent steps of the triggering process synchronously, i.e. only after the target process has completed successfully

- Tracking the status of the started processes

- Monitoring and error handling
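Synchronous execution, status tracking and error handling all come down to a polling loop in the triggering system. The following is a minimal sketch, not the connector's implementation. The state names `success` and `failed` are the terminal states of an Airflow DAG run, as returned by `GET /api/v1/dags/{dag_id}/dagRuns/{dag_run_id}`; for the opposite direction it assumes a (hypothetical) BW status endpoint mapped to the same strings. `fetch_state` is any callable that returns the current state.

```python
import time


class ProcessFailedError(RuntimeError):
    """Raised when the target process ends in a failure state."""


def wait_for_completion(fetch_state, poll_interval=30.0, timeout=3600.0,
                        sleep=time.sleep, clock=time.monotonic):
    """Block until the target process succeeds; raise on failure or timeout.

    `sleep` and `clock` are injectable so the loop can be tested without waiting.
    """
    deadline = clock() + timeout
    while True:
        state = fetch_state()
        if state == "success":
            return state  # the triggering process may now continue
        if state == "failed":
            raise ProcessFailedError("target process reported state 'failed'")
        if clock() >= deadline:
            raise TimeoutError(f"target process still '{state}' after {timeout}s")
        sleep(poll_interval)  # e.g. 'queued' or 'running': check again later
```

Raising an exception instead of returning a flag lets the caller's own error handling take over, for example by marking the BW process step or the Airflow task as failed.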

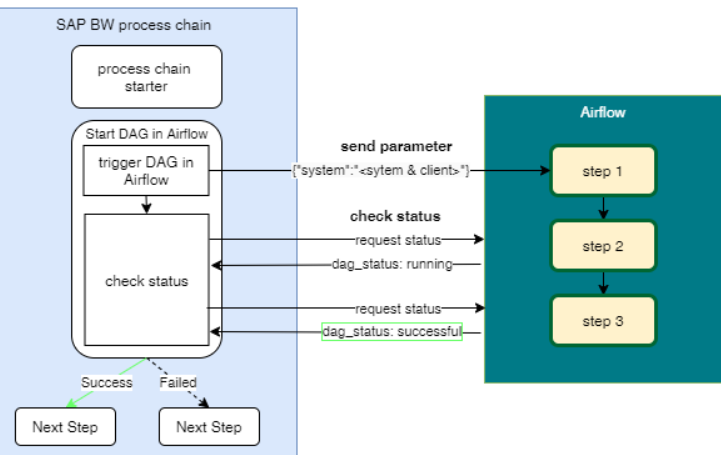

Based on these basic ideas, we at NextLytics have developed an SAP BW connector that implements the above requirements. The process of triggering a workflow using an SAP BW process chain is shown schematically in the following figure.

Example of triggering from SAP BW with synchronous process tracking

While the BW process runs, the status of the workflow run and the log output of its tasks can be tracked in parallel. For example, a conditional file upload can be started that transfers only changed files to the database.
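Task logs can be read through the Airflow 2 stable REST API as well: `GET .../dagRuns/{dag_run_id}/taskInstances` lists the tasks of a run, and `.../taskInstances/{task_id}/logs/{task_try_number}` returns the log of one attempt. The sketch below only builds these URLs. A detail that is easy to miss is that run IDs of manually triggered runs contain characters such as `:` and `+`, which must be percent-encoded in the path. The DAG and task names are hypothetical.

```python
from urllib.parse import quote


def _run_path(base_url, dag_id, dag_run_id):
    # safe="" also encodes ':' and '+' found in IDs like "manual__2021-06-01T10:00:00+00:00"
    return (f"{base_url.rstrip('/')}/api/v1/dags/{quote(dag_id, safe='')}"
            f"/dagRuns/{quote(dag_run_id, safe='')}")


def task_instances_url(base_url, dag_id, dag_run_id):
    """URL listing all task instances (with their states) of one DAG run."""
    return f"{_run_path(base_url, dag_id, dag_run_id)}/taskInstances"


def task_log_url(base_url, dag_id, dag_run_id, task_id, try_number=1):
    """URL of the log for one attempt of one task."""
    return (f"{task_instances_url(base_url, dag_id, dag_run_id)}"
            f"/{quote(task_id, safe='')}/logs/{try_number}")
```

Requesting the log URL with the header `Accept: text/plain` returns the log as plain text, which a BW process could display line by line.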

The connection to the Airflow instance can be verified with a connection test, and parameters (e.g. the target system) are passed through corresponding input fields.
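One way to implement such a connection test, sketched here as an assumption rather than the connector's actual check, is the Airflow 2 health endpoint `GET /api/v1/health`. Its JSON response reports a `status` of `healthy` or `unhealthy` for the `metadatabase` and the `scheduler`. The sketch treats an unreachable host as a failed test.

```python
import json
import urllib.request


def is_healthy(health):
    """True if both the metadata database and the scheduler report 'healthy'."""
    return all(
        health.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


def check_connection(base_url, timeout=10):
    """Call the health endpoint; any network or HTTP error counts as a failed test."""
    try:
        with urllib.request.urlopen(f"{base_url.rstrip('/')}/api/v1/health", timeout=timeout) as resp:
            return is_healthy(json.load(resp))
    except (OSError, ValueError):  # URLError/HTTPError subclass OSError; ValueError covers bad JSON
        return False
```

Checking the scheduler as well as reachability matters because a web server can answer while no scheduler is running, in which case triggered runs would stay queued.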

For triggering BW processes from Airflow, it makes sense to develop a dedicated operator with a user-friendly interface. It encapsulates the logic for synchronous execution in one central place instead of distributing it across many different workflow definitions.
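The sketch below shows the kind of logic such an operator would encapsulate. In Airflow it would live in the `execute(context)` method of a `BaseOperator` subclass; here it is a plain class so that it runs without Airflow installed. The `client` object with `start_chain()` and `chain_status()` methods is a hypothetical wrapper around the BW endpoint, and the status strings are assumptions.

```python
import time


class BWProcessChainTask:
    """Operator-shaped sketch: start a BW process chain and block until it ends."""

    def __init__(self, client, chain_id, poll_interval=30.0, max_polls=120, sleep=time.sleep):
        self.client = client  # hypothetical BW client
        self.chain_id = chain_id
        self.poll_interval = poll_interval
        self.max_polls = max_polls
        self.sleep = sleep

    def execute(self, context=None):
        log_id = self.client.start_chain(self.chain_id)
        for _ in range(self.max_polls):
            status = self.client.chain_status(log_id)
            if status == "success":
                # In Airflow, an operator's return value is pushed to XCom by
                # default, so downstream tasks could read the BW log ID.
                return log_id
            if status == "failed":
                raise RuntimeError(f"Process chain {self.chain_id} failed (log {log_id})")
            self.sleep(self.poll_interval)
        raise TimeoutError(f"Process chain {self.chain_id} still running after {self.max_polls} polls")
```

A workflow definition then only declares which chain to run, while polling, timeouts and failure handling stay in one place.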

Do you have questions, or are you interested in the connector? Please get in touch. As an experienced project partner, NextLytics helps you solve your data challenges effectively, from data integration to the use of machine learning models.
