The business world communicates, thrives and operates on data. This lifeblood, which connects today with tomorrow, must be kept in motion reliably. This is where modern workflow management helps: digital processes are executed, various systems are orchestrated and data processing is automated. In this article, we show you how all this can be done with the open-source workflow management platform Apache Airflow. You will find its key features and components, along with the most important terms, explained for a smooth start.

Why implement digital workflow management?

Running workflows manually or merely triggering them with cron jobs is no longer state of the art. Many companies are therefore looking for a cron alternative. As soon as digital tasks, or entire processes, are to be executed repeatedly and reliably, an automated solution is needed. Beyond the mere execution of work steps, other aspects are important:

- Troubleshooting

The true value of a workflow management platform becomes apparent when unforeseen errors occur. In addition to notification and precise localization of errors in the process, automatic documentation is also part of the job. Ideally, a retry should be initiated automatically after a given time window, so that short-term system reachability problems resolve on their own. Task-specific system logs should be available to the user for quick troubleshooting.

- Flexibility in the design of the workflow

The modern challenges of workflow management go beyond hard-coded workflows. To allow workflows to adapt dynamically to the current execution interval, for example, the execution context should be accessible via variables at the time of execution. Concepts such as conditions are also becoming increasingly useful in the design of flexible workflows.

- Monitoring execution times

A workflow management system is a central point that tracks not only the status but also the execution time of the workflows. Execution times can be monitored automatically by means of service level agreements (SLA). Unexpectedly long execution times, for example due to an unusually large amount of data, are thus detected and can optionally trigger a notification.
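The retry and runtime-monitoring ideas described above can be sketched in plain Python. This is a conceptual illustration only, not Airflow's implementation: the function `run_with_retries` and its return format are hypothetical, while the parameter names `retries` and `retry_delay` are chosen to mirror the task arguments of the same name in Airflow.

```python
import time


def run_with_retries(task, retries=2, retry_delay=1.0, sla_seconds=None,
                     sleep=time.sleep, clock=time.monotonic):
    """Run task(); on failure wait retry_delay seconds and try again.

    `retries` is the number of additional attempts after the first one.
    Returns the result, the attempt count, the duration and an SLA-miss flag.
    (Hypothetical helper for illustration, not part of Airflow.)
    """
    started = clock()
    attempt = 0
    while True:
        attempt += 1
        try:
            result = task()
            break
        except Exception as exc:
            if attempt > retries:
                raise  # retries exhausted: surface the original error
            print(f"attempt {attempt} failed: {exc!r}; retrying in {retry_delay}s")
            sleep(retry_delay)
    duration = clock() - started
    # An SLA is treated here as a simple upper bound on the total runtime.
    sla_missed = sla_seconds is not None and duration > sla_seconds
    return {"result": result, "attempts": attempt,
            "duration": duration, "sla_missed": sla_missed}
```

A task that fails briefly, for example because a system is temporarily unreachable, succeeds on a later attempt without manual intervention. A run that exceeds `sla_seconds` is flagged so that a notification could be sent.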

Airflow grew out of these challenges: it was developed in 2014 as Airbnb's internal workflow management platform to manage the company's numerous complex workflows. Apache Airflow was open source from the beginning and is now available to users free of charge under the Apache License.

Apache Airflow Features

Since Airflow became a top-level project of the Apache Software Foundation in 2019, the contributing community has grown enormously. As a result, the feature set has expanded considerably over time, with regular releases that address users' current needs.

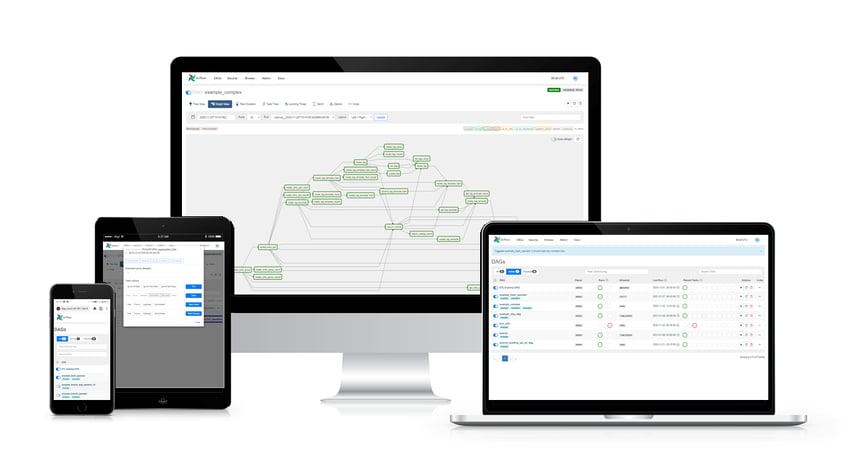

Rich web interface

Compared to other workflow management platforms, the rich web interface stands out. The status of workflow runs, the resulting runtimes and, of course, the log files are directly accessible via the web interface. Important functions for managing workflows, such as starting, pausing and deleting a workflow, are available directly from the start page. This makes the interface intuitive to use, even without programming knowledge. Access works best from a desktop, but is also possible from mobile devices with some loss of comfort.

Command line interface and API

Apache Airflow is not only operated by clicking. For technical users there is a command line interface (CLI), which also covers the main functions. Through the redesigned REST API, other systems can also access Airflow with secure authentication. This enables a number of new use cases and system integrations.
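As a rough sketch of how another system might trigger a workflow run through the REST API, the following builds (but does not send) an HTTP request using only the Python standard library. It assumes the stable REST API of Airflow 2.x under `/api/v1` and an installation configured to accept basic authentication. The host, DAG ID and credentials are placeholders, and `build_trigger_request` is a hypothetical helper. Check the API version and authentication method of your own installation before relying on this.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, username, password, conf=None):
    """Build a POST request that asks Airflow to start a new DAG run.

    Assumes the Airflow 2.x stable REST API and basic authentication.
    """
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    body = json.dumps({"conf": conf or {}}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Placeholder values for illustration only.
request = build_trigger_request(
    "http://localhost:8080", "example_etl", "admin", "admin", {"source": "manual"}
)
print(request.get_method(), request.full_url)
# To actually send the request: urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the example runnable without a live Airflow instance.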

Realization of complex workflows with internal and external dependencies

In Apache Airflow, workflows are defined in Python code. The order of tasks can be easily customized: predecessors, successors and parallel tasks can be defined. In addition to these internal dependencies, external dependencies can also be implemented. For example, it is possible to pause the workflow until a file appears in cloud storage or an SQL statement returns a valid result. Advanced functions, such as the reuse of workflow parts (TaskGroups) and conditional branching, satisfy even demanding users.
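Conditional branching can be pictured as a function that picks which downstream task to follow, while the tasks that were not chosen are skipped. Airflow's branching operators work on a similar principle, with a Python callable returning the ID of the task to follow. The sketch below is plain Python and does not use Airflow. The task names, the threshold and both helper functions are hypothetical.

```python
def choose_branch(row_count, threshold=1000):
    """Return the ID of the downstream task that should run."""
    return "full_load" if row_count > threshold else "incremental_load"


def resolve_branch(candidates, chosen):
    """Mark the chosen task as 'run' and all other candidates as 'skipped'."""
    if chosen not in candidates:
        raise ValueError(f"unknown task id: {chosen}")
    return {task_id: ("run" if task_id == chosen else "skipped")
            for task_id in candidates}


candidates = ["full_load", "incremental_load"]
plan = resolve_branch(candidates, choose_branch(row_count=250))
print(plan)
```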

Scalability and containerization

In terms of deployment, Apache Airflow can initially run on a single server and then scale horizontally as the number of tasks grows. Deployment on distributed systems is mature, and different architecture variants (Kubernetes, Celery, Dask) are supported.

Customizability with plug-ins and macros

Many integrations to Apache Hive, Hadoop Distributed File System (HDFS), Amazon S3, etc. are provided in the default installation. Others can be added through custom task classes. Due to its open-source nature, even the core of the application is customizable and the community provides well-documented plug-ins for most requirements.


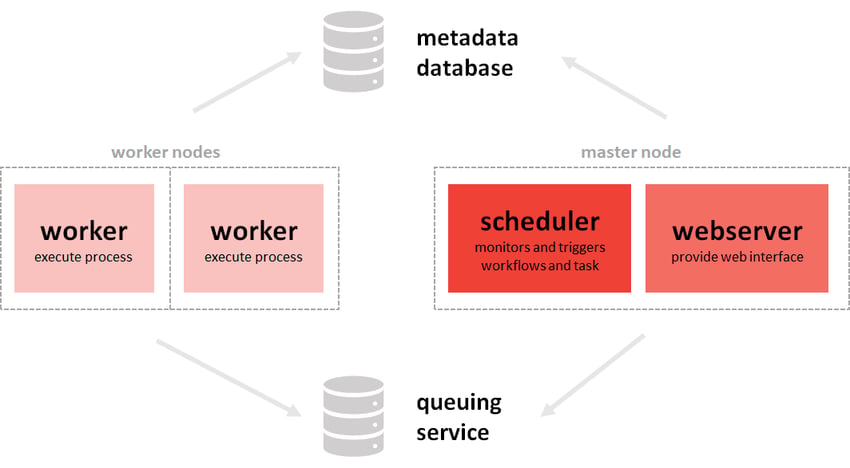

Components in Apache Airflow

Airflow's many functions result from the interaction of its components. The architecture can vary depending on the use case, so it is possible to scale flexibly from a single machine to an entire cluster.

The picture shows a multi-node architecture of Airflow with several machines. Compared to a single-node architecture, the workers are placed on their own nodes.

- A scheduler along with the attached executor takes care of tracking and triggering the stored workflows. While the scheduler keeps track of which task can be executed next, the executor takes care of the selection of the worker and the following communication. Since Apache Airflow 2.0 it is possible to use multiple schedulers. For particularly large numbers of tasks, this reduces latency.

- As soon as a workflow is started, a worker takes over the execution of the stored commands. For special requirements regarding RAM and GPU etc., workers with specific environments can be selected.

- The web server allows easy user interaction in a graphical interface. This component runs separately. If required, the web server can be omitted, but the monitoring functions are very popular in everyday business.

- Among other things, the metadata database securely stores statistics about workflow runs and connection data to external databases.
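The division of labor between scheduler, executor and workers can be illustrated with a small standard-library sketch (Python 3.9+ for `graphlib`). It is a simplified model under stated assumptions, not Airflow's architecture: a thread pool stands in for the workers, there is no metadata database, and `run_workflow` is a hypothetical function.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_workflow(dependencies, commands, max_workers=2):
    """Run tasks in dependency order, in parallel where possible.

    dependencies maps each task to the set of tasks it depends on;
    commands maps each task to the callable that performs its work.
    Returns the task names in the order in which they finished.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    finished = []
    running = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # "Scheduler": determine which tasks have all predecessors done.
            for task in sorter.get_ready():
                # "Executor": hand the task to a free worker thread.
                running[pool.submit(commands[task])] = task
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                future.result()  # re-raise any error from the worker
                sorter.done(task)  # unblocks downstream tasks
                finished.append(task)
    return finished


dependencies = {
    "transform_a": {"extract"},
    "transform_b": {"extract"},
    "load": {"transform_a", "transform_b"},
}
commands = {name: (lambda n=name: n)
            for name in ("extract", "transform_a", "transform_b", "load")}
order = run_workflow(dependencies, commands)
print(order)
```

The two transform tasks have no dependency on each other, so they may run at the same time and finish in either order. `extract` always finishes first and `load` last.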

With this setup, Airflow is able to execute data processes reliably. In combination with the Python programming language, it is easy to define what should run in the workflow and how. Before creating your first workflows, you should be familiar with a few terms.

Important terminology in Apache Airflow

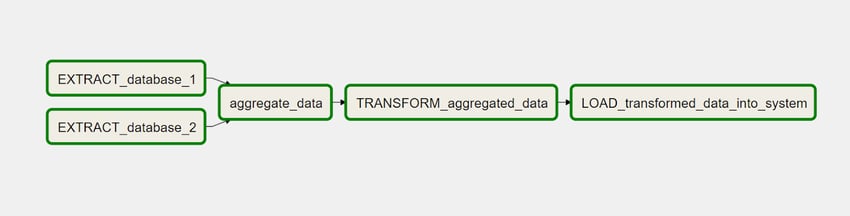

The term DAG (Directed Acyclic Graph) is often used in connection with Apache Airflow. It is the internal representation of a workflow. In Airflow, DAG is used synonymously with workflow and is probably the most central term. Accordingly, a DAG run denotes a workflow run, and the workflow files are stored in the DAG bag. The graphic shows such a DAG, which schematically describes a simple Extract-Transform-Load (ETL) workflow.

With Python, related tasks are combined into a DAG. Programmatically, this DAG serves as a container that keeps the tasks, their order and information about the execution (interval, start time, retries in case of errors, ...) together. By defining the relations (predecessor, successor, parallel), even complex workflows can be modeled. There can be several start and end points, and conditional branching is possible. Only cycles are not allowed.
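To make the container idea and the "no cycles" rule concrete, here is a small stand-in model in plain Python (3.9+ for `graphlib`). The classes `Dag` and `Task` are hypothetical and deliberately minimal. They are not Airflow's classes, although the `>>` notation for "runs before" is borrowed from the way dependencies are written in Airflow.

```python
from graphlib import CycleError, TopologicalSorter


class Dag:
    """Container for tasks plus execution settings (illustrative only)."""

    def __init__(self, dag_id, schedule, retries=0):
        self.dag_id = dag_id
        self.schedule = schedule
        self.retries = retries
        self.tasks = {}

    def execution_order(self):
        """Return one valid task order; raises CycleError on a cycle."""
        graph = {task_id: task.upstream for task_id, task in self.tasks.items()}
        return list(TopologicalSorter(graph).static_order())


class Task:
    def __init__(self, task_id, dag):
        self.task_id = task_id
        self.upstream = set()
        dag.tasks[task_id] = self  # register the task in its DAG

    def __rshift__(self, other):
        """`a >> b` declares that b runs after a; returns b to allow chains."""
        other.upstream.add(self.task_id)
        return other


dag = Dag("simple_etl", schedule="@daily", retries=2)
extract = Task("extract", dag)
transform = Task("transform", dag)
load = Task("load", dag)

extract >> transform >> load
print(dag.execution_order())  # ['extract', 'transform', 'load']

load >> extract  # introduces a cycle: extract -> transform -> load -> extract
try:
    dag.execution_order()
except CycleError:
    print("cycle detected: this is not a valid DAG")
```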

In the DAG, tasks can be formulated either as operators or as sensors. While operators execute the actual commands, a sensor pauses the execution until a certain event occurs. Both basic types have been specialized for specific applications in numerous community developments. Plug-and-play operators are essential for easy integration with Amazon Web Services, Google Cloud Platform and Microsoft Azure, among many others. The specializations range from the simple BashOperator for executing Bash commands to the GoogleCloudStorageToBigQueryOperator. The long list of available operators can be found in the GitHub repository.
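The difference between the two task types can be sketched without Airflow: an operator simply does its work, whereas a sensor checks a condition repeatedly and only lets the workflow continue once it is met. The classes below are hypothetical illustrations. The names `poke`, `poke_interval` and `timeout` follow the terminology of Airflow's sensors, but the logic shown is a simplification.

```python
import tempfile
import time
from pathlib import Path


class PrintOperator:
    """Operator-style task: executes its command right away."""

    def __init__(self, task_id, message):
        self.task_id = task_id
        self.message = message

    def execute(self):
        print(self.message)
        return self.message


class WaitForFileSensor:
    """Sensor-style task: blocks until a file exists or the timeout is hit."""

    def __init__(self, task_id, path, poke_interval=1.0, timeout=10.0,
                 sleep=time.sleep):
        self.task_id = task_id
        self.path = Path(path)
        self.poke_interval = poke_interval
        self.timeout = timeout
        self.sleep = sleep

    def poke(self):
        """Return True once the awaited event has occurred."""
        return self.path.exists()

    def execute(self):
        waited = 0.0
        while not self.poke():
            if waited >= self.timeout:
                raise TimeoutError(f"{self.path} did not appear in time")
            self.sleep(self.poke_interval)
            waited += self.poke_interval
        return True


# Usage: the file already exists here, so the sensor returns immediately.
with tempfile.TemporaryDirectory() as folder:
    target = Path(folder) / "data.csv"
    target.write_text("id,value\n")
    WaitForFileSensor("wait_for_data", target).execute()
    PrintOperator("process", "file arrived, processing can start").execute()
```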

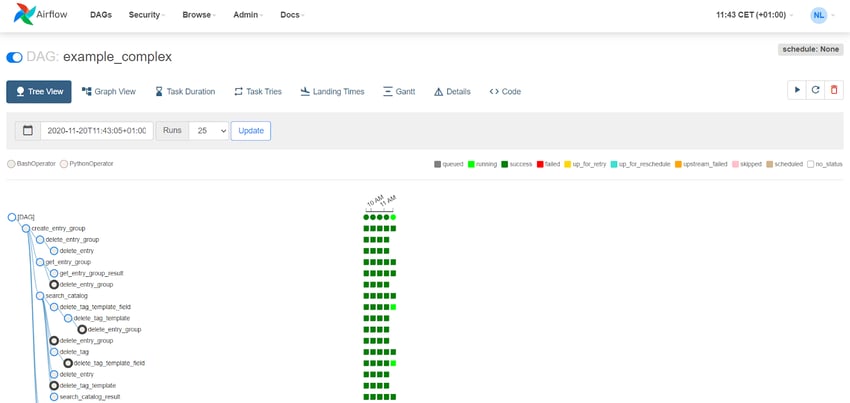

In the web interface, the DAGs are represented graphically. In the graph view (upper figure) the tasks and their relationships are clearly visible. The border colors of the tasks indicate the state of each task in the selected workflow run. In the tree view (following graphic), past runs are also displayed. Here, too, the intuitive color scheme points out possible errors directly at the associated task. With just two clicks, the log files can be conveniently read. Monitoring and troubleshooting are definitely among Airflow's strengths.

Whether machine learning workflow or ETL process, a look at Airflow is always worthwhile. Feel free to contact us if you need support with the custom-fit configuration or want to upgrade an existing installation. We are also happy to share our knowledge in hands-on workshops.
