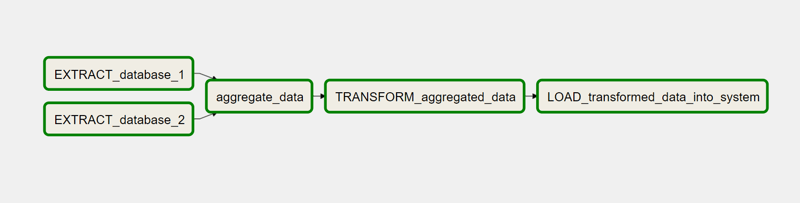

Running ETL workflows with Apache Airflow means relying on state-of-the-art workflow management. Airflow orchestrates recurring processes that organize and manage data and move it between systems.

The workflow management platform is free to use under the Apache License and can be modified and extended to suit individual needs. Even a plain installation without plug-ins offers a lot of potential. Get inspired by the possibilities in this article!

Example ETL workflow in Airflow with the steps Extract, Aggregate, Transform and Load

Apache Airflow

Airflow, a top-level project of the Apache Software Foundation, has already won over many companies. Because it scales extremely well, the platform suits companies of any size, from startups to large corporations.

Workflows are defined, scheduled and executed with simple Python code. Even complex data pipelines with numerous internal dependencies between tasks can be defined quickly and robustly. Because the challenges of data engineering don't end there, Airflow comes with a rich command line interface, an extensive web interface and, since the major release Apache Airflow 2.0, a stable REST API. Numerous features significantly simplify monitoring and troubleshooting. Depending on requirements, Airflow can be extended with plug-ins, macros and user-defined classes.
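How such task dependencies fit together can be illustrated without Airflow itself. The following sketch is not Airflow code: it models a hypothetical ETL workflow (all task names are made up) as a directed acyclic graph using Python's standard `graphlib` module (Python 3.9+) and derives one valid execution order, which is the kind of constraint the scheduler has to respect.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL tasks: each key maps to the set of tasks it depends on.
dependencies = {
    "aggregate": {"extract_orders", "extract_customers"},
    "transform": {"aggregate"},
    "load": {"transform"},
}


def execution_order(deps: dict[str, set[str]]) -> list[str]:
    """Return one order in which every task runs after all of its upstream tasks."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(execution_order(dependencies))
```

In an actual DAG file, the same structure would be declared with Airflow's bitshift syntax, e.g. `[extract_orders, extract_customers] >> aggregate >> transform >> load`. A cycle in the dependencies makes `graphlib` raise a `CycleError`, much as Airflow refuses to load a DAG that contains one.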

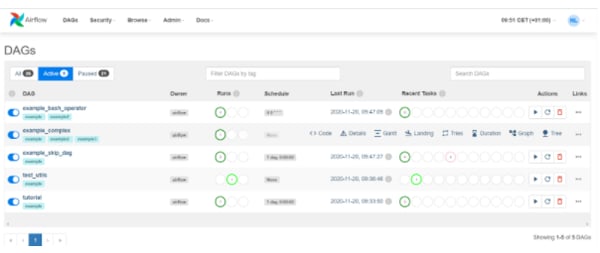

The status of a workflow's runs and the associated log files are just a click away. Important metadata such as the schedule interval and the time of the last run are shown in the main view. While Airflow executes ETL workflows on its own, you stay informed about their current status. Checking the web interface is not mandatory, though, as Airflow can optionally send a notification by email or Slack when an attempt fails.
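Failure notifications are typically configured per DAG through `default_args`. The sketch below assumes an SMTP server is already configured for Airflow; the owner name, the email address and the `notify_failure` callback are hypothetical. The callback only builds a message: posting it to Slack would additionally require, for instance, the Slack provider package.

```python
from datetime import timedelta


def notify_failure(context: dict) -> str:
    """Hypothetical on_failure_callback; Airflow calls it with the task context."""
    ti = context["task_instance"]
    message = f"Task {ti.task_id} in DAG {ti.dag_id} failed: {context.get('exception')}"
    # Post `message` to a chat tool such as Slack here; returned only for testing.
    return message


# Passed as DAG(..., default_args=default_args); applies to every task in the DAG.
default_args = {
    "owner": "data-team",
    "retries": 2,                          # retry twice before the task counts as failed
    "retry_delay": timedelta(minutes=5),
    "email": ["data-team@example.com"],
    "email_on_failure": True,              # requires SMTP settings in the Airflow config
    "on_failure_callback": notify_failure,
}
```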

ETL is the main field of application, but Apache Airflow is just as well suited to orchestrating machine learning workflows.

Apache Airflow web interface. The status of the workflow runs is visible on the left (Runs).

The status of the tasks in each workflow's most recent run is visible on the right (Recent Tasks).

For the second workflow (example_complex), the context menu is open.

To give you a better overview of what Apache Airflow can do, we have summarized various use cases below. In addition to classic application ideas, you will find inspiration on how to enrich your data pipelines with state-of-the-art features.

- Classic

- Ambitious

- (Im)possible

Classic

The classic use cases reflect the basic idea behind workflow management systems: tasks, especially in the ETL area, should be executed reliably and on time. External and internal dependencies must be taken into account before a task is started.

An external dependency is, for example, a file to be processed that is provided by the business department. If it is not yet available, processing cannot begin. Internal dependencies describe the order in which tasks depend on one another.

- Cron alternative

As soon as tasks such as cleaning a database, sending newsletters or evaluating log files have to run regularly, cron jobs are often used. Moving these tasks to Airflow instead keeps them manageable even in large numbers, and their status information remains traceable.
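Since Airflow accepts cron expressions directly as a DAG schedule, existing crontab entries can be carried over largely unchanged. The hypothetical helper below sketches one migration step: it splits a crontab line into the schedule and the command (which could then run, for example, as the `bash_command` of a `BashOperator`) and substitutes one of Airflow's named presets where one matches exactly. Special lines such as `@reboot` or environment variable assignments are deliberately not handled.

```python
# Airflow's named schedule presets, keyed by the cron expression they stand for.
AIRFLOW_PRESETS = {
    "0 * * * *": "@hourly",
    "0 0 * * *": "@daily",
    "0 0 * * 0": "@weekly",
    "0 0 1 * *": "@monthly",
    "0 0 1 1 *": "@yearly",
}


def parse_crontab_line(line: str) -> tuple[str, str]:
    """Split a classic five-field crontab entry into (schedule, command)."""
    fields = line.split(maxsplit=5)
    if len(fields) < 6:
        raise ValueError(f"not a five-field crontab entry: {line!r}")
    cron_expr = " ".join(fields[:5])
    # Use the readable preset if one exists, otherwise keep the raw expression.
    return AIRFLOW_PRESETS.get(cron_expr, cron_expr), fields[5]
```

For example, `0 3 * * * /usr/local/bin/cleanup_db.sh` keeps its cron expression, while a midnight job maps to `@daily`.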

- Conditional, modular workflows

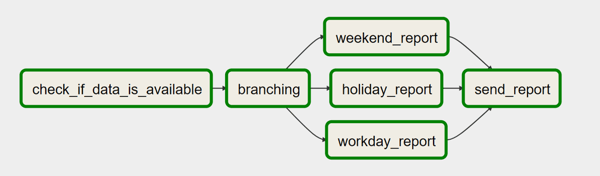

With Airflow, complex dependencies are easy to implement. Built-in concepts such as branching and TaskGroups allow complex workflows to be built in a maintainable way. Branching determines which of several alternative paths is executed, and TaskGroups make smaller parts of the workflow reusable.

Example use case for branching in Airflow: depending on the type of day (working day, holiday, weekend), different reports are generated.
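The branching decision in this example can be sketched as a plain Python function. With a `BranchPythonOperator`, Airflow runs the downstream task whose `task_id` the callable returns and skips the other branches. The task IDs and the holiday set are hypothetical; in a real DAG the date would come from the run's context, and the holidays perhaps from a calendar table.

```python
from datetime import date


def choose_report(day: date, holidays: set[date]) -> str:
    """Return the task_id of the report branch to follow for the given day."""
    if day.weekday() >= 5:        # Saturday (5) or Sunday (6)
        return "weekend_report"
    if day in holidays:
        return "holiday_report"
    return "workday_report"


# Wiring inside a DAG (sketch, not executed here):
#   choose = BranchPythonOperator(task_id="choose_report",
#                                 python_callable=<wrapper that calls choose_report>)
#   choose >> [workday_report, holiday_report, weekend_report]
```

In this sketch a holiday that falls on a weekend produces the weekend report; swapping the two checks would give holidays precedence instead.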

- Processing of data directly after generation

When several systems work together, waiting situations often arise. For example, a Bash job is to be started as soon as a file has been delivered, but the exact time of delivery is unknown. With sensors, a special class of tasks, Airflow pauses the workflow until the file exists. The same principle can be applied to tables or views in a database that are updated daily.
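The "poke" principle behind a sensor can be sketched in a few lines of plain Python. This is a simplified illustration, not Airflow's implementation; the parameter names `poke_interval` and `timeout` mirror those of Airflow's `FileSensor`, while the function name is hypothetical.

```python
import time
from pathlib import Path


def wait_for_file(path: str, poke_interval: float = 60.0, timeout: float = 3600.0) -> bool:
    """Check repeatedly for a file; True once it exists, False after the timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if Path(path).exists():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Wait before the next check, but never sleep past the deadline.
        time.sleep(min(poke_interval, remaining))
```

Unlike this sketch, a real sensor that times out fails the task (unless `soft_fail` is set), and with `mode="reschedule"` it releases its worker slot between checks instead of sleeping.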

Ambitious

The more workflows run on Airflow, the faster ideas for special use cases emerge. As an experienced user, you will want to go beyond the basics and take full advantage of Airflow's capabilities. Some advanced use cases only require a change to the configuration file. Others are implemented with custom plug-ins, task types (operators) or macros. Overall, there are many ways to adapt Airflow to emerging needs.

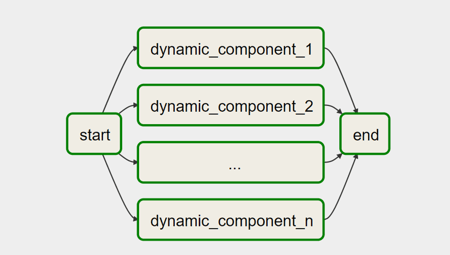

- Flexible workflows

Although many aspects of a workflow are predefined in the Python files, flexibility can be built in at several points. At runtime, context variables such as the execution date are available, which can be used in SQL statements, for example. Via the CLI, the REST API or the web interface, additional (encrypted) variables can be created. These can be used, for example, to keep the file paths for an upload flexible. Last but not least, even the workflow structure itself can be kept dynamic: the number of task instances, for example for a variable number of entries in a database, can be set via variables. In the graphic, the components dynamic_component_# are created from a variable.
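How a variable can drive the number of components is sketched below. The helper is hypothetical and kept free of Airflow imports so it runs on its own; in a DAG file its input would typically be read with `Variable.get`, and the variable name `component_count` is an assumption. Note that code at the top level of a DAG file runs every time the file is parsed, so each read of the variable also queries the metadata database.

```python
def dynamic_component_ids(raw_value: str) -> list[str]:
    """Turn a variable value such as "3" into the task IDs dynamic_component_0..2."""
    count = int(raw_value.strip())
    if count < 0:
        raise ValueError("the number of components must not be negative")
    return [f"dynamic_component_{i}" for i in range(count)]


# In a DAG file the value might come from an Airflow Variable, e.g.
#   raw_value = Variable.get("component_count", default_var="1")
# and each ID would become the task_id of one operator created in a loop.
```

Context variables work through Jinja templating instead: a templated field such as an operator's `sql` may contain `{{ ds }}`, which Airflow replaces with the run's date in `YYYY-MM-DD` format.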

Creation of dynamic components with Airflow

- Big Data: scaling horizontally with Airflow

Scaled horizontally, Airflow can process up to 10,000 automated tasks per day, with several terabytes flowing through the data pipelines daily. In the Big Data space, for example, Airflow manages Spark transformations and data pulls from the data warehouse. Execution errors and inconsistencies are reliably detected, so that you can react as quickly as possible.

For example, if a data schema changes without notice or the data volume grows due to special circumstances, the effect becomes visible in monitoring, and the responsible persons can be notified automatically.
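Such checks are often written as ordinary Python functions that run inside a task. In Airflow, an uncaught exception marks the task as failed, which in turn triggers any configured failure notification. The function names, column lists and the volume threshold below are hypothetical.

```python
def check_schema(expected: list[str], actual: list[str]) -> None:
    """Raise if columns were added, removed or renamed without notice."""
    missing = [c for c in expected if c not in actual]
    unexpected = [c for c in actual if c not in expected]
    if missing or unexpected:
        raise ValueError(f"schema changed: missing={missing}, unexpected={unexpected}")


def check_volume(row_count: int, typical_rows: int, factor: float = 3.0) -> None:
    """Raise if the delivery is far larger than usual (the factor is an assumption)."""
    if row_count > typical_rows * factor:
        raise ValueError(f"unusual data volume: {row_count} rows (typical: {typical_rows})")
```

Column order is ignored on purpose here; only the set of column names is compared.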

- REST API

As a special feature, Airflow includes an extensive REST API. It can be used to retrieve status information about workflow runs, to pause or trigger workflows, and to store variables and connection information in the backend. When a workflow is triggered via the API, context variables can optionally be passed along. Access is secured by authentication.
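A trigger request against Airflow 2's stable REST API can be built with the standard library alone. The sketch below targets `POST /api/v1/dags/{dag_id}/dagRuns` and passes parameters in the `conf` field. It assumes the basic-auth API backend is enabled; the base URL, credentials and `conf` contents are placeholders, and the request is only built, not sent.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url: str, dag_id: str, conf: dict,
                          user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request that triggers one run of a DAG."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns",
        data=json.dumps({"conf": conf}).encode(),  # parameters available to the run
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",     # assumes basic auth is enabled
        },
        method="POST",
    )
```

Sending it is a matter of `urllib.request.urlopen(req)`. Pausing a workflow works similarly with `PATCH /api/v1/dags/{dag_id}` and the body `{"is_paused": true}`.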

This makes it possible, for example, to connect Airflow to SAP BW via the REST API.

(Im)possible

To assess which application ideas are feasible, the limitations of Airflow should also be considered. At its core, Airflow is a large-scale batch scheduler that is mainly used to orchestrate third-party systems, and its workflows are static by design. The following features can therefore only be implemented through workarounds.

- Workflows without fixed schedules

Running a workflow at several arbitrary dates is not possible without additional effort, as neither the web interface nor the CLI offers a function for this. Workarounds are to trigger the runs via the REST API or to start the workflow manually in the web interface at the desired time.
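One way to implement the REST API workaround is a small external process, for example a job on another system, that runs every few minutes and triggers every planned run that has become due. The hypothetical function below only computes the request bodies; each would be sent to `POST /api/v1/dags/{dag_id}/dagRuns` of a DAG whose schedule is set to `None`. The field name `logical_date` assumes Airflow 2.2 or later (earlier 2.x releases use `execution_date`).

```python
from datetime import datetime, timezone


def due_payloads(planned: list[datetime], now: datetime,
                 triggered: set[datetime]) -> list[dict]:
    """Return one dagRuns request body per planned date that is due and not yet triggered.

    All datetimes must be timezone-aware; Airflow stores dates in UTC.
    """
    due = sorted(d for d in planned if d <= now and d not in triggered)
    return [{"logical_date": d.isoformat()} for d in due]


if __name__ == "__main__":
    plan = [datetime(2024, 3, 5, 6, tzinfo=timezone.utc),
            datetime(2024, 4, 2, 6, tzinfo=timezone.utc)]
    print(due_payloads(plan, datetime.now(timezone.utc), set()))
```

Keeping track of already triggered dates matters because a DAG cannot have two runs with the same logical date.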

- Data exchange between tasks

Data pipelines are one of Airflow's main applications. Ironically, Airflow does not natively support them in the sense of passing data between tasks. With XCom, at least metadata can be exchanged between tasks; for example, the location of data in the cloud can be passed to a downstream task. The maximum size of this metadata is currently limited by the size of a binary large object (BLOB) in the metadata database.
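The "pass the location, not the data" pattern can be sketched with two plain functions. With Airflow's TaskFlow API (`@task`), the return value of a task is stored as an XCom and can be handed to the next task, so only the short path travels through the metadata database. In this hypothetical sketch a local temporary directory stands in for cloud storage; on distributed workers that do not share a disk, a shared location such as object storage would be needed.

```python
import csv
import os
import tempfile


def extract(rows: list[dict], target_dir: str) -> str:
    """Write the extracted rows to storage and return only their location."""
    if not rows:
        raise ValueError("nothing to extract")
    path = os.path.join(target_dir, "extract.csv")
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
    return path  # with @task, this small string would become the XCom value


def load(path: str) -> int:
    """Downstream step: receives only the path and reads the data itself."""
    with open(path, newline="") as f:
        return sum(1 for _ in csv.DictReader(f))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        location = extract([{"id": 1, "amount": 9.5}, {"id": 2, "amount": 3.0}], tmp)
        print(location, load(location))
```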

These limitations are often the reason for custom plug-ins and macros that extend Airflow's functionality. To date, however, Airflow has proven to be a reliable and well-designed workflow management platform that solves many of the challenges of modern data engineering. Combined with Anaconda environments, Airflow is also well suited to training machine learning models.

For more information on Airflow and what's new in Apache Airflow 2.0, take a look at our whitepaper. If you need support in realizing your application ideas, do not hesitate to contact us. We will be happy to enrich your project with extensive practical experience!
