The Apache Airflow Summit is the highlight of the year for the community of developers, contributors, and users of the popular workflow orchestration platform. The 2022 edition of the Summit was held from May 23rd to May 27th as a global hybrid conference with local sessions in 13 different cities around the globe, 53 sessions of presentations and discussions, and an audience of almost 8,000 people following along in the online conference interface. Our NextLytics consultants joined the conference to look out for the newest developments and trending topics and have consolidated their observations into this conference summary for you!

Apache Airflow Summit conference welcome screen (image source: airflowsummit.org/)

Clustering Airflow Summit Sessions

With 53 sessions lasting between 30 and 60 minutes each and no officially announced conference tracks to help with navigation, it can be challenging to find the most interesting presentations and discussions. After following many of the live sessions and re-watching almost all sessions from the 5-day Summit, we have derived 4 clusters of major topics:

- Apache Airflow core developments and new features

- Apache Airflow operations and monitoring

- Apache Airflow application examples and best practices

- Apache Airflow Machine Learning

If you don’t have the opportunity or resources to check out the massive amount of recorded content from the Summit yourself, the following sections give you a quick rundown of the most important developments. Read to the very end for an additional glance at the most promising future features of Airflow that were hinted at during the conference!

Main topics of the Apache Airflow Summit 2022 conference

Airflow Core Features

Since Airflow 2.3 had only been released on April 30th, 2022, showcasing its new features and recapping some of the internal workings of the newest release was naturally one of the main topics of the Summit.

A major development in Airflow 2.3 is the introduction of proper “Dynamic DAG” functionality, officially named Dynamic Task Mapping. Until now, every example of creating tasks dynamically at DAG runtime came with the customary disclaimer “we know this is NOT good practice”. Now there is a supported way to do it: as part of the Airflow core, any type of Operator can be multiplied (“expanded”) by iterating through the contents of Python lists or dictionaries. No more custom helper code required.
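To make the idea of “expanding” a task more concrete, here is a minimal plain-Python sketch of what happens conceptually: one task definition is turned into one task instance per element of a list that is only known at runtime. This is a model for illustration, not Airflow code. In Airflow 2.3 the corresponding call is `.expand()` on an operator or on a `@task`-decorated function; the helper `expand`, the class `MappedTaskInstance`, and the file names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class MappedTaskInstance:
    """One runtime copy of a task, identified by its position in the input."""
    task_id: str
    map_index: int
    kwargs: Dict[str, Any]


def expand(task_id: str, **mapped: List[Any]) -> List[MappedTaskInstance]:
    """Create one task instance per element of the mapped argument.

    Hypothetical helper: this sketch supports a single mapped keyword only.
    """
    if len(mapped) != 1:
        raise ValueError("this sketch supports exactly one mapped argument")
    name, values = next(iter(mapped.items()))
    return [
        MappedTaskInstance(task_id, index, {name: value})
        for index, value in enumerate(values)
    ]


def list_files() -> List[str]:
    # Stand-in for an upstream task whose result is only known at runtime.
    return ["a.csv", "b.csv", "c.csv"]


def process(filename: str) -> str:
    # Stand-in for the actual work done by each expanded task instance.
    return filename.upper()


# One "process" task definition becomes three task instances.
instances = expand("process", filename=list_files())
results = [process(**ti.kwargs) for ti in instances]
```

The number of instances follows the length of the upstream result, so the DAG author does not have to know it when writing the DAG.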

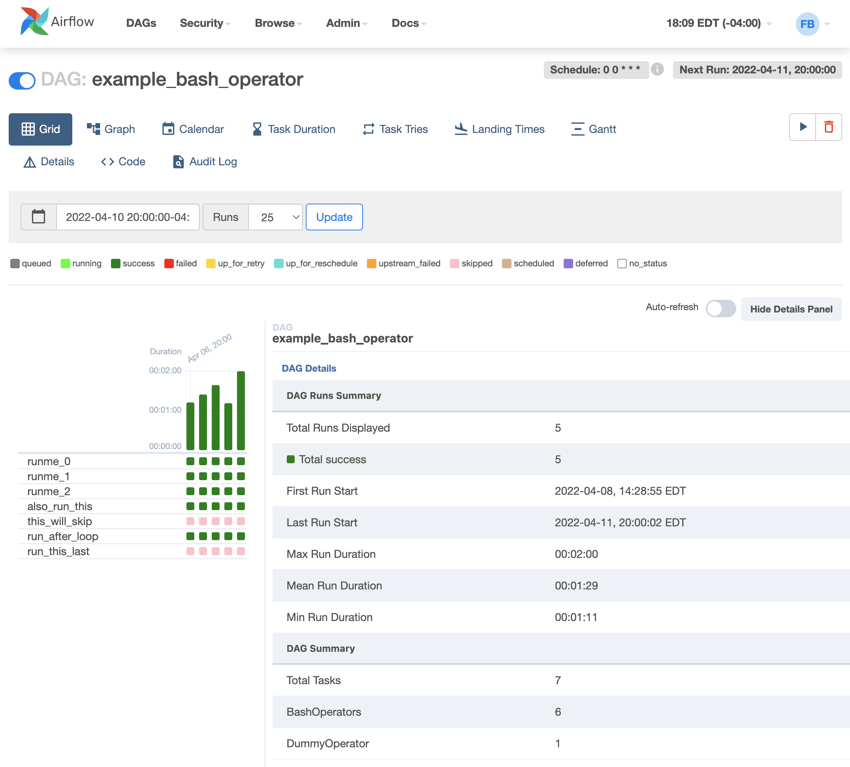

The new Grid View component in the user interface is a highly visible new feature. Displaying all tasks and their individual executions and state in a compact form, this is a significant step forward from previously available visualizations. Expanded tasks created by the new dynamic DAG features are displayed in grouped form and are intuitively accessible in this view. The additional Details panel allows users to quickly check in-depth information about a DAG to further improve the overall user experience of the web interface.

Screenshot of the Grid View display component introduced in Airflow 2.3 (image source: airflow.apache.org)

A less visible but important architectural development is the introduction of “deferrable” Operators, which release their worker slot while they wait for an external condition. To support them, a new component called the Triggerer has been added to the Airflow core to handle all asynchronous jobs, i.e. jobs that wait for an external condition for longer periods of time instead of actively processing anything themselves. This interface is a logical successor to the experimental “Smart Sensors” concept, which enabled resource-efficient scanning for trigger conditions by grouping sensor activities. The developers report savings of up to 90% in compute resources. Using the feature is as easy as switching to the asynchronous variant of an operator where one is available; the Airflow community is adding these variants bit by bit.
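Why a single Triggerer process can look after many waiting tasks is easiest to see with plain `asyncio`. The sketch below is a conceptual model only, not the Airflow trigger API: the function names `wait_for_condition` and `triggerer` and the sensor names are hypothetical, and the “external condition” is simulated with a short sleep.

```python
import asyncio
import time
from typing import List, Tuple


async def wait_for_condition(name: str, delay: float) -> str:
    """Stand-in for a trigger: waits without blocking the event loop."""
    await asyncio.sleep(delay)
    return f"{name} ready"


async def triggerer(triggers: List[Tuple[str, float]]) -> List[str]:
    """Run all waiting triggers concurrently on one event loop."""
    return await asyncio.gather(
        *(wait_for_condition(name, delay) for name, delay in triggers)
    )


# Fifty "sensors" that each wait 0.2 seconds for their condition.
triggers = [(f"sensor_{i}", 0.2) for i in range(50)]

start = time.perf_counter()
events = asyncio.run(triggerer(triggers))
elapsed = time.perf_counter() - start

print(f"{len(events)} triggers fired in {elapsed:.2f}s")
```

Because the waits overlap on one event loop, the total time stays close to the longest single wait rather than the sum of all waits, and no worker slot is held while nothing is happening.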

For more information about the latest features in Airflow, check out the official release blog post or contact us to discuss the potential of upgrading your Airflow system with regard to your specific requirements and environment!


Airflow Operation and Monitoring

Another large share of the sessions focused on how to operate Airflow in different (cloud) environments and especially on how to address the challenges that arise when a growing number of workflows or instances run in production: monitoring and (data) observability.

As Apache Airflow reaches a mature state, focus shifts from introducing sparkling new features to optimizing day-to-day operations for many teams. This shift was clearly visible at the 2022 conference. Software-as-a-Service providers shared their insights into running many Airflow environments efficiently on Kubernetes clusters and/or cloud infrastructure. While some of this knowledge may be applicable in smaller-scale operations, the omnipresent hot topic was how to monitor Airflow, its overall performance, and specifically the success state of the business logic packaged into DAGs.

With Apache Airflow ETL operations in mind, the question of how to reduce “data downtime” was discussed in multiple sessions. Slightly different definitions of the concept were presented, but in general it refers to problems arising from errors somewhere in the data pipeline that is orchestrated by Airflow as the central component. Robust system operation based on DevOps principles and automation is one part of the solution. Check out our previous article about how to create a robust system using GitLab CI/CD if you want to know more about this. The second and still more challenging part is to monitor data flows and data quality across workflows. Airflow is a great tool to ensure robust execution of DAGs but needs extension to enable monitoring the success or failure of the actual business logic implemented.

This is where the concept of data lineage and the OpenLineage specification come into play. Data lineage information is metadata about the origin and processing steps taken to create a certain piece of information, for example a value in a data warehouse table. Lineage is expected to enable a boost in efficiency for tracing problems through complex data ecosystems as well as communicating meaning and responsibility attached to datasets and data products. The goal of OpenLineage is to become the communication standard for reporting and checking data lineage metadata across all systems contributing to data pipelines. Current developments aim to provide out-of-the-box compatibility for Airflow and data processing DAGs and tasks, and some examples have been shown at the Summit. While this integration effort is making progress, it is still a complex procedure that requires deep understanding of both Airflow internals and the tools used for the integration.
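How lineage metadata helps with tracing problems can be shown with a small plain-Python sketch. The records below are a simplified, hypothetical structure loosely modeled on the job/inputs/outputs idea behind lineage events; they are not a valid OpenLineage payload, and all job and dataset names are invented for illustration.

```python
from typing import Dict, List, Set

# Each record says: this job read these datasets and wrote those datasets.
lineage_events: List[Dict] = [
    {"job": "extract_orders",
     "inputs": ["crm.orders"], "outputs": ["staging.orders"]},
    {"job": "extract_customers",
     "inputs": ["crm.customers"], "outputs": ["staging.customers"]},
    {"job": "build_revenue",
     "inputs": ["staging.orders", "staging.customers"],
     "outputs": ["dwh.revenue"]},
]


def upstream_datasets(dataset: str, events: List[Dict]) -> Set[str]:
    """Return every dataset that directly or indirectly feeds `dataset`."""
    found: Set[str] = set()
    stack = [dataset]
    while stack:
        current = stack.pop()
        for event in events:
            if current in event["outputs"]:
                for source in event["inputs"]:
                    if source not in found:
                        found.add(source)
                        stack.append(source)
    return found


# If dwh.revenue looks wrong, these are the places to check.
suspects = upstream_datasets("dwh.revenue", lineage_events)
```

With lineage collected across systems, the same kind of traversal answers "where did this value come from" without reading every DAG by hand.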

Currently, there is no single best way to implement data observability and companies like Monte Carlo, Databand, or Acryl Data (with their open source project DataHub) actively push their services with promises of low-effort plug-and-play solutions.

Airflow Best Practices and Applications

Presentations from vendors and conference sponsors on how to best use Airflow in different scenarios and in combination with third-party solutions from the data ecosystem are another traditionally strong theme of the Summit.

Among the many presentations on these topics, using CI/CD tools to automate Airflow operations and DAG development processes has been a frequent recommendation. Details of how to implement such DevOps principles for Airflow paralleled our own collection of best practices. Other best practices for workflow and data flow implementation included the application of containerized process steps using the DockerOperator or KubernetesOperator, the use of (automated) testing regimes, and utilization of features like Pools and Service Level Agreements. We have covered some of these Apache Airflow best practices in the NextLytics blog before and will highlight others in more detail in the near future.

One interesting observation for customers of Airflow SaaS offerings is that access to the Airflow API was named as one of the most sought-after features in multiple sessions. Chances are that SaaS providers like AWS are prioritizing work on enabling full use of the REST API for customers to allow for deep integration with other systems like SAP BW process chains and services.
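As a rough idea of what such an integration looks like from the outside, the following sketch builds (but does not send) a request that asks Airflow to start a DAG run through the stable REST API endpoint `POST /api/v1/dags/{dag_id}/dagRuns`. The host, DAG id, credentials, and `conf` payload are hypothetical, and basic authentication is an assumption that only holds if the corresponding auth backend is enabled on the Airflow instance.

```python
import base64
import json
import urllib.request
from typing import Any, Dict


def build_trigger_request(
    base_url: str,
    dag_id: str,
    conf: Dict[str, Any],
    username: str,
    password: str,
) -> urllib.request.Request:
    """Prepare a POST request that would create a new DAG run."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps({"conf": conf}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the instance accepts HTTP basic authentication.
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_trigger_request(
    "http://localhost:8080", "train_model", {"model_version": "v2"},
    "user", "pass",
)

# Sending is left out on purpose; against a reachable instance it would be:
# urllib.request.urlopen(request)
```

Any external system that can issue an HTTP call can trigger workflows this way, which is why API access matters so much on managed offerings.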

Airflow for Machine Learning

Last but not least, and very much in line with how we often deploy Airflow for our customers, using the orchestration platform for Machine Learning applications and operations was a major topic in 2022.

Airflow has shaped up to be one of the most popular orchestration tools in the Machine Learning operations (MLOps) landscape. Scalability, extensibility, and richness in features make Airflow a great choice for managing ML workflows - a view shared by many leading companies from the tech industry as contributions to this Summit have again demonstrated.

Major reasons why Airflow is considered perfect for orchestrating MLOps workflows mentioned in presentations and discussions at this conference were:

- DAG execution is very robust and gives many options to recover from failed states and/or to re-run tasks as necessary

- DAGs can freely integrate with many different systems through the many available Operators, allowing teams to choose the best tools and ML frameworks for their specific needs

- Recurring steps in the ML pipeline can be easily containerized and run using Docker or Kubernetes Operators, introducing security features and version control into the process

- Airflow can be integrated with third-party systems through the REST API, again enabling more advanced integration with the existing system context

Apache Airflow is a popular solution for orchestrating Machine Learning development and operations frameworks.

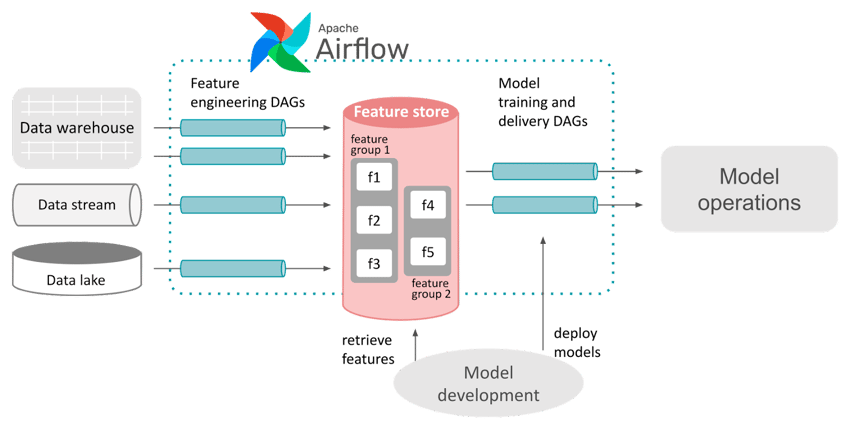

The diagram shows a schematic of how Airflow can be used to manage ML workflows around a Feature Store.

Airflow Machine Learning best practices displayed at the Summit 2022 also revolved around the emerging components like feature stores and model registries to create user-friendly end-to-end ML platforms.

The (near) Future of Airflow

With so many improvements presented, best practices shared, and ideas sparked - what more can be expected from Apache Airflow in the near future? To conclude our first recap of the Airflow Summit 2022, here are some of the features that have been announced for coming releases:

The ability to have multi-event triggers based on the deferrable operator interface will further enhance the design possibilities for DAGs. A native DAG versioning interface will provide a reliable way to migrate through changes in different environments, make custom versioning workarounds obsolete, and allow for more robust deployments. Data-driven scheduling and data-aware DAG execution will introduce the ability to actively refer to Dataset objects in DAGs and use these (and changes on them) to create cross-DAG dependencies. And finally, the Airflow web interface will soon see a complete relaunch as a modern React-based web application to lift user experience to a whole new level.

We already can’t wait to witness the next releases of Airflow and the inspiring community exchange about new trends and topics at next year's Airflow Summit! In the meantime, we would be happy to hear about your teams’ challenges and collaborate on creating efficient solutions by making use of Airflow’s broad capabilities.

