Delta loading is a crucial feature of enterprise Data Warehouses (DWH) which ensures continuous and stable performance while regularly replicating data from source systems into the DWH and ensuring minimal impact on all involved systems. This is commonly achieved by leveraging a mechanism which can determine the minimum required rows of source data to be transferred to the DWH to guarantee the target model to be up-to-date. The concrete implementation of this mechanism varies greatly between various DWH solutions even within the SAP product landscape.

In this article we would like to showcase what we have found during our client engagements and internal testing as to the extent and functionality of delta loading capabilities that SAP Datasphere [formerly known as SAP Data Warehouse Cloud (DWC)] offers and their corresponding limitations. While the delta capabilities of Datasphere are indeed still quite restricted compared to SAP Business Warehouse, we have found two approaches that work in a variety of use cases.

Setup

In this scenario we have examined an S/4HANA OnPrem system which was set up as a trial instance via the SAP Cloud Application Library (CAL) and serves as a data source for our internal Datasphere tenant. We have established a connection between S/4HANA and Datasphere as well as configured a Data Provisioning Agent to facilitate Remote Tables and the Real-Time-Replication functionality.

Our sample Architecture consists of S/4HANA Change Data Capture (CDC) enabled Core Data Service (CDS) Views, which are accessed as Remote Tables in Datasphere and are acting as the staging layer.

The inclined reader might enjoy reading more about the way CDC in CDS Views works at:

CDS based data extraction – Part II Delta Handling | SAP Blogs

At this point however it should be sufficient to note that CDC allows the target system to recognize which rows have been changed in the source object since it has last been replicated.

Approaches

We have determined two major design approaches to implement delta loading in an enterprise scenario using Datasphere:

Real-Time-Replication on Remote Tables

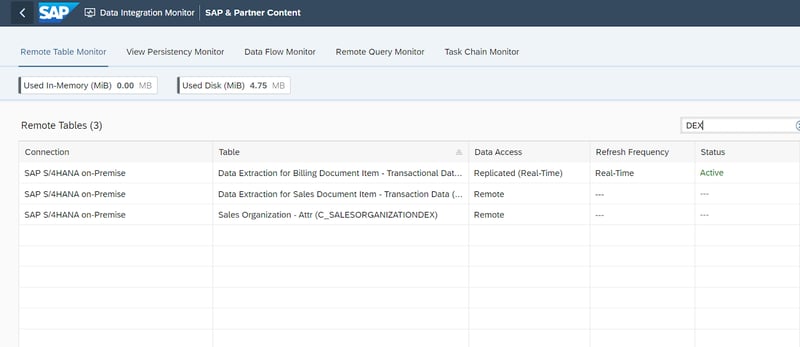

The easiest way to implement delta loading is to leverage CDC enabled CDS Views by importing these as Remote Tables in Datasphere and enabling Real-Time access in the Data Integration Monitor for them.

This will ensure that the Remote Table in Datasphere is a near real-time updated copy of the CDS View in the source. While this is a very simple, quick and effective approach, it also introduces some limitations.

a) The refresh frequency cannot be adjusted

While there are signs that this feature might be introduced by SAP in the midterm, it is currently not possible to adjust the frequency of the replication, meaning it will occur near real-time. While this is not an issue for most scenarios, clients that are used to nightly upload cycles will find that a change management concept will be necessary, since source system changes will be present almost instantly in the DWH instead of being available on the next business day only.

b) The Remote Table is always a 1-1 copy of the source

Since we are replicating a Remote Table and a Remote Table is just a copy of the metadata of the source object, we cannot adjust it in Datasphere, as we might be used to, by e.g. creating additional fields. Adjustments in subsequently used Views are still possible of course.

Watch the recording of our webinar:

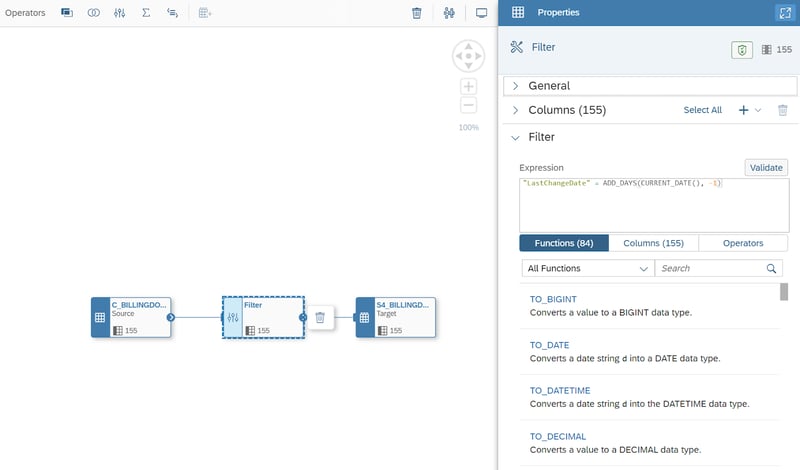

Data Flows with filter on suitable delta indicator

This approach necessitates finding a suitable field in the source object which can be used as a delta indicator. Ideally the source needs to have something like a LastChangeDate field, recording the datetime as a new record is created, a record is deleted, or an existing one is changed. For the deletion scenario a binary field like DeletionFlag would be required additionally. If these requirements are met, we can follow the approach in Datasphere by creating a Data Flow object, inserting a Remote Table, a local Target Table and a filter, limiting the Data Flow to e.g. LastChangeDate = CurrentDate - 1. Afterwards we would schedule this Data Flow to run every night. This way each nightly run will always apply a filter to fetch only those records that were modified on the day before.

This approach will work for inserts and updates, if you can guarantee that all those changes are reflected in a field like LastChangeDate, but the deletion scenario would only be solved if one of the following applies:

a) Deletions in the source are not possibleIn an ERP source system many potential source objects could already be set up in such a way, that they will never delete any record.

b) Deletions in source are done via deletion flagIf deletions do happen in the source object, then it is necessary that it is not done via an actual deletion of the row, but by setting an indicator field like DeletionFlag instead.

c) Deletions in source don’t need to be deleted in DWHEven if deletions do happen in the source object and they are done via actual deletion of the row, then if atleast the requirements of the Datsphere model does not necessitate a deletion in the Data Warehouse to reflect the source, then the deletion problem is solved. Edge cases still have to be considered like e.g. creation and deletion on the same business day would never appear in the DWH model.

If none of the above apply, or even if there is no LastChangeDate-esque field to handle inserts/updates, it is still possible to implement a pseudo delta by doing some SQL based source/target comparisons to determine which records are modified and should therefore be transferred. Unfortunately this approach has far greater performance impact since we have to fetch additional data from the source to facilitate this comparison. If all else fails, this can be used as a last resort.

Delta Loading - Our Conclusion

We have shown two major approaches to implementing a delta mechanism in Datasphere. These are suitable to cover some of the most common scenarios. The approach of activating Real-Time-Replication on Remote Tables is the most convenient solution in integration scenarios where it is available and where the limitations are a non issue. Other cases can be mostly covered with the Data Flow approach, as long as the described limitations are considered.

It should be mentioned that there are other possible solutions that we explored, including but not limited to some which would require the inclusion of additional software components like SAP Landscape Transformation Replication Server (SLT) or SAP Data Intelligence. However unless your company already utilizes these software components, we would suggest going with a pure Datasphere approach so as to avoid overengineering.

While Datasphere continues to be developed and supplied with additional features we hope to have more freedom in the setup of these approaches so that we may cover more complex scenarios while maintaining the current clever architecture that preserves a minimal performance impact.

Do you have questions about this or other topics? Are you trying to build up the necessary know-how in your department or do you need support with a specific question? We are happy to help you. Request a non-binding consulting offer today.

SAP Data Warehouse, Datasphere

/Logo%202023%20final%20dunkelgrau.png?width=221&height=97&name=Logo%202023%20final%20dunkelgrau.png)