In an age of rapid digitization, organizations need to keep pace with a changing landscape. A robust orchestration engine that manages complex workflows, automates tasks, and executes them reliably is the foundation for meeting the challenges of data integration and analysis. Shaped by a vibrant and active open source community, Apache Airflow has stood the test of time as one of the leading technologies in its sector while steadily evolving, both by improving existing functionality and by widening its feature scope. Which recent additions to the list of Apache Airflow features will help your team reach its goals and improve its processes? We give you a shortlist of new additions that might warrant a timely system upgrade.

Airflow has seen four minor version releases since September 2022, each introducing new features and functionality to the now well-established Airflow 2.x major release. From streamlining your pipelines using Setup and Teardown Tasks to empowering your internal reporting using the notification feature of Airflow, we want to introduce you to the potential that the collective intelligence of an open-source ecosystem can bring to your business. The following aspects are new additions or reworked facets of Apache Airflow features that have been released during the last year.

Task Queue Rework

Tasks getting stuck during processing can be a significant annoyance when working with orchestration engines at scale. In the past, this could occasionally happen with Airflow, especially when using the Celery Executor. Tasks could get stuck in the “queued” status and would only continue once the current scheduler instance was terminated and the orphaned task was adopted by another scheduler, possibly delaying task execution by up to a few hours. A common workaround for that issue was the configuration setting “AIRFLOW__CELERY__STALLED_TASK_TIMEOUT”, which resolved most but not all issues with stuck tasks.

In version 2.6.0, the Airflow community introduced a new setting called “scheduler.task_queued_timeout”, which handles these situations for the Celery and Kubernetes Executors. Airflow now regularly queries its internal database for tasks that have been queued for longer than the value configured in this setting and hands any stuck tasks it finds to the executor to be failed and rescheduled.
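The periodic check described above can be pictured with a small, self-contained model. This is a simplified sketch and not Airflow's scheduler code: the `QueuedTask` record and the `find_stuck_tasks` helper are hypothetical names, and the 600-second timeout is only an illustrative value.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class QueuedTask:
    """Hypothetical stand-in for a queued task instance in the database."""
    task_id: str
    queued_at: datetime


def find_stuck_tasks(tasks, now, task_queued_timeout):
    """Return the tasks that have been queued longer than the timeout (in seconds)."""
    limit = timedelta(seconds=task_queued_timeout)
    return [task for task in tasks if now - task.queued_at > limit]


now = datetime(2023, 6, 1, 12, 0, 0)
tasks = [
    QueuedTask("extract", now - timedelta(seconds=30)),
    QueuedTask("transform", now - timedelta(seconds=900)),
]

# Only "transform" exceeds the illustrative 600-second limit.
stuck = find_stuck_tasks(tasks, now, task_queued_timeout=600)
print([task.task_id for task in stuck])  # prints ['transform']
```

In this model, everything returned by `find_stuck_tasks` corresponds to the tasks that would be failed and rescheduled.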

With the introduction of this setting, some of the older workarounds have been deprecated to streamline the configuration for those executors. The deprecated settings that it effectively replaces are “kubernetes.worker_pods_pending_timeout”, “celery.stalled_task_timeout”, and “celery.task_adoption_timeout”. As a result, Airflow task scheduling is more reliable than before, and configuring fail and retry behavior no longer requires experimental workarounds.
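When preparing an upgrade, it can help to check whether any of the deprecated options are still set through environment variables. The following sketch assumes Airflow's `AIRFLOW__{SECTION}__{KEY}` naming convention for environment variables and uses the option names exactly as listed above; the helper functions are hypothetical, and section names should be verified against the configuration reference of your Airflow version.

```python
import os

REPLACEMENT = "scheduler.task_queued_timeout"
DEPRECATED = (
    "kubernetes.worker_pods_pending_timeout",
    "celery.stalled_task_timeout",
    "celery.task_adoption_timeout",
)


def env_var_name(option):
    """Translate 'section.key' into the AIRFLOW__SECTION__KEY form."""
    section, key = option.split(".", 1)
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def deprecated_settings_in_use(environ=None):
    """Return the deprecated options that are set in the given environment."""
    environ = os.environ if environ is None else environ
    return [option for option in DEPRECATED if env_var_name(option) in environ]


# Illustrative environment; pass nothing to inspect the real one.
example_env = {"AIRFLOW__CELERY__STALLED_TASK_TIMEOUT": "300"}
for option in deprecated_settings_in_use(example_env):
    print(f"{env_var_name(option)} is deprecated; use {env_var_name(REPLACEMENT)}")
```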

DAG Status Notifications

Monitoring the status of data pipelines is an important challenge for any data science or data engineering team. With version 2.6.0, the Airflow community introduced the DAG status notification feature, which helps your team keep track of the current status of your data pipelines from a variety of external systems. The feature sends a notification to an external system as soon as the status of a DAG run changes, for example once it has finished. Currently, the only system with notification support integrated out of the box is Slack, but a variety of additional integrations is already available via Airflow extension modules developed by members of the community. These include, but are not limited to, components for sending notifications via email (SMTP) or Discord. You can also develop a custom notifier for your specific system yourself, using the BaseNotifier abstract class provided by Airflow.

The following is an example of a DAG definition using the notification feature to send a Slack message on a successful execution:

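```python
from datetime import datetime

from airflow import DAG
from airflow.operators.empty import EmptyOperator
from airflow.providers.slack.notifications.slack import send_slack_notification

with DAG(
    "example_slack_notification",
    start_date=datetime(2023, 1, 1),
    # The notifier is passed as a callback that fires when the DAG run succeeds
    on_success_callback=[
        send_slack_notification(
            text="DAG has run successfully",
            channel="#dag-status",
            username="Airflow",
        )
    ],
):
    # Placeholder task so that the DAG has something to run
    EmptyOperator(task_id="placeholder_task")
```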
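A custom notifier built on BaseNotifier could look roughly like the following. This is a minimal sketch under stated assumptions: `PrintNotifier` is a hypothetical class that only prints its message instead of calling an external system, and the fallback stub in the `except` branch exists solely so that the example can be run without Airflow installed.

```python
try:
    from airflow.notifications.basenotifier import BaseNotifier
except ImportError:
    # Assumption: stand-in base class used only when Airflow is not installed.
    class BaseNotifier:
        def __init__(self):
            pass


class PrintNotifier(BaseNotifier):
    """Hypothetical notifier that prints a message instead of sending it."""

    # Fields listed here can be rendered as templates by Airflow.
    template_fields = ("message",)

    def __init__(self, message):
        super().__init__()
        self.message = message

    def notify(self, context):
        # `context` is the callback context; only `run_id` is read in this sketch.
        run_id = context.get("run_id", "unknown run")
        text = f"{self.message} (run: {run_id})"
        print(text)
        return text
```

An instance such as `PrintNotifier(message="DAG has run successfully")` could then be passed to `on_success_callback` in the same way as the Slack notifier above.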

Setup and Teardown Tasks

Complex data workflows often require infrastructure resources to be created and configured before they can run, and those same resources need to be cleaned up after the workflow has finished. Integrating these steps into your DAGs historically carried the risk of distorting the pipeline execution result, for example when certain resources were not correctly removed after the logic part of the pipeline had run correctly. With version 2.7.0, Airflow introduced Setup and Teardown tasks, which allow these steps to be handled in dedicated tasks.

This brings multiple benefits. First, it allows for a more modular approach to designing your data pipeline by separating the logic of your tasks from their infrastructure requirements, which improves code readability and ease of maintenance. Second, setup and teardown tasks change the conditions under which a DAG run is considered failed. For example, by default a DAG run that fails during its teardown task, when all previous steps have run without errors, will still be shown as a successful run. This behavior can be overridden, but by default it helps prevent false alarms when monitoring your pipelines.
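The effect on the reported run state can be illustrated with a toy model. This is a deliberately simplified sketch and not Airflow's actual state logic: `TaskOutcome` and `dag_run_succeeded` are hypothetical names, and the `on_failure_fail_dagrun` flag is modeled on the Airflow option of the same name that overrides the default behavior.

```python
from dataclasses import dataclass


@dataclass
class TaskOutcome:
    """Hypothetical record of how a single task in a DAG run ended."""
    task_id: str
    succeeded: bool
    is_teardown: bool = False
    on_failure_fail_dagrun: bool = False


def dag_run_succeeded(outcomes):
    """A failed teardown only fails the run if it is explicitly configured to."""
    for outcome in outcomes:
        ignored = outcome.is_teardown and not outcome.on_failure_fail_dagrun
        if not outcome.succeeded and not ignored:
            return False
    return True


run = [
    TaskOutcome("create_cluster", succeeded=True),
    TaskOutcome("run_query", succeeded=True),
    TaskOutcome("delete_cluster", succeeded=False, is_teardown=True),
]
print(dag_run_succeeded(run))  # prints True

# Overriding the default makes the failed teardown count.
run[-1].on_failure_fail_dagrun = True
print(dag_run_succeeded(run))  # prints False
```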

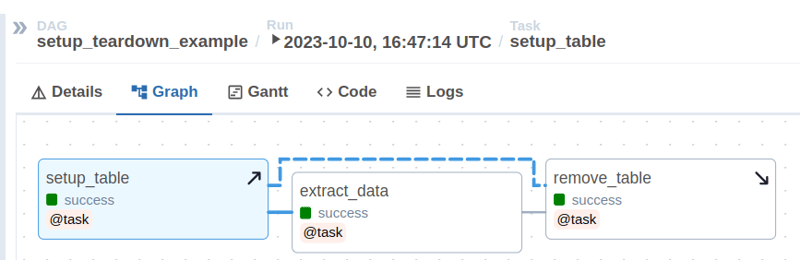

In the Airflow DAG graph UI, a setup task is marked with an upward-pointing arrow, a teardown task is marked with a downward-pointing arrow, and the relation between a setup task and its teardown task is shown as a dotted line.

Airflow Datasets and OpenLineage

Airflow has often been criticized for not offering a data-aware approach to workflows, focusing entirely on the execution of tasks instead of the actual payload in data pipelines. The Airflow Dataset interface introduced with version 2.4 in September 2022 finally changed that. Tasks can now define the data objects they consume or produce, and entire DAGs can be scheduled based on dataset updates. Just-in-time scheduling without any sensor-like polling workaround is now possible, as is monitoring the timeliness of datasets in the Airflow UI. Check out our blog post introducing Airflow datasets from earlier this year for more information.
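The idea of dataset-based scheduling can be sketched with a toy model in plain Python. This is not Airflow's implementation: the `DatasetScheduler` class and the dataset URIs are hypothetical, and the model assumes that a consumer is triggered once every dataset it depends on has been updated since its last run. In Airflow itself, the same relationship is declared with `Dataset` objects listed as task outlets and in a DAG's schedule.

```python
class DatasetScheduler:
    """Toy model: trigger a consumer once all of its datasets have been updated."""

    def __init__(self):
        self._required = {}  # consumer name -> dataset URIs it is scheduled on
        self._pending = {}   # consumer name -> URIs updated since its last trigger

    def register_consumer(self, name, dataset_uris):
        self._required[name] = set(dataset_uris)
        self._pending[name] = set()

    def dataset_updated(self, uri):
        """Record an update and return the consumers that should run now."""
        triggered = []
        for name, required in self._required.items():
            if uri in required:
                self._pending[name].add(uri)
                if self._pending[name] == required:
                    triggered.append(name)
                    self._pending[name] = set()  # wait for fresh updates again
        return triggered


scheduler = DatasetScheduler()
scheduler.register_consumer(
    "build_report",
    ["s3://example-bucket/orders.csv", "s3://example-bucket/customers.csv"],
)
print(scheduler.dataset_updated("s3://example-bucket/orders.csv"))     # prints []
print(scheduler.dataset_updated("s3://example-bucket/customers.csv"))  # prints ['build_report']
```

No polling is involved in this model: the consumer is evaluated only at the moment a producer reports an update.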

Keeping track of how information propagates through the complex network of data pipelines that feeds data warehouses and business intelligence platforms is a key aspect of managing and evaluating these systems and their performance. Which downstream tasks will be affected when the data model of a source database table changes? A whole new ecosystem of tools and best practices has grown up around the terms Data Observability and Data Lineage. The Airflow Dataset interface was a stepping stone towards integration with third-party tools for lineage tracking through the OpenLineage specification.

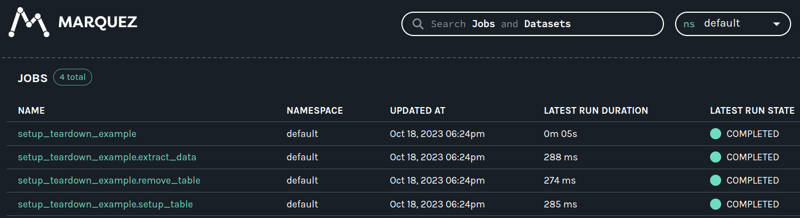

Airflow version 2.7 makes OpenLineage integration a native feature as of August 2023. Where extension modules had to be manually loaded and written into DAG definitions in the past, Airflow DAGs now automatically register with an OpenLineage backend service. This integration works especially well when tasks are defined with input and output datasets, allowing graph-like tracing of how data assets are processed and what their respective upstream and downstream dependencies are.
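To make the notion of graph-like tracing concrete, the following sketch walks a small lineage graph built from declared inputs and outputs. It is an illustration only and does not use the OpenLineage client or event format: the job and dataset names are hypothetical, and `downstream_jobs` is a helper written for this example.

```python
from collections import deque

# Hypothetical jobs with their declared input and output datasets.
JOBS = {
    "load_orders": {"inputs": ["db.orders"], "outputs": ["staging.orders"]},
    "build_revenue": {"inputs": ["staging.orders"], "outputs": ["mart.revenue"]},
    "build_dashboard": {"inputs": ["mart.revenue"], "outputs": ["bi.dashboard"]},
    "load_customers": {"inputs": ["db.customers"], "outputs": ["staging.customers"]},
}


def downstream_jobs(jobs, changed_dataset):
    """Return the jobs affected by a change to a dataset, in breadth-first order."""
    affected = []
    seen_datasets = {changed_dataset}
    queue = deque([changed_dataset])
    while queue:
        dataset = queue.popleft()
        for job_name, io in jobs.items():
            if dataset in io["inputs"] and job_name not in affected:
                affected.append(job_name)
                # Follow the job's outputs to find jobs further downstream.
                for output in io["outputs"]:
                    if output not in seen_datasets:
                        seen_datasets.add(output)
                        queue.append(output)
    return affected


print(downstream_jobs(JOBS, "db.orders"))
# prints ['load_orders', 'build_revenue', 'build_dashboard']
```

A lineage backend answers the same kind of question at scale, based on the inputs and outputs reported by each task.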

New Apache Airflow Features - Our Conclusion

While this article highlights only a handful of the most exciting new features of Apache Airflow, the project has seen thousands of smaller changes in the past year that expand functionality, improve performance, and strengthen the reliability of the orchestration platform. Keeping your system updated is not only a security measure but also gives you access to many new Apache Airflow features and improvements.

We have helped teams upgrade their Airflow systems and support them in keeping up with the latest changes. Reach out to us if you have any questions about how new features can strengthen your business processes or if you want to discuss your current architecture and potential roadmap.
