A year has passed since SAP unveiled the new product strategy for its data platform in February 2025: SAP Business Data Cloud (BDC). BDC also gave rise to SAP Databricks, the new hybrid product resulting from the collaboration between the two data companies. Since the announcement, we have regularly discussed what specifically distinguishes SAP Databricks from the original product, now mostly referred to as "Enterprise Databricks." Which system environment is suitable for a particular use case, which functions are included or not included in SAP Databricks, and are there strategic reasons for choosing one variant over the other?

We provide insight into the results of our research and give you all the information you need to make an informed decision. In today's first part of this overview, we focus on the functional scope of the two Databricks variants and how they differ in terms of technical system operation from the customer's perspective. In the second part, we look at zero-copy data sharing, operating costs, and strategic considerations for deciding between the variants.

What else is included in the SAP Business Data Cloud besides SAP Databricks? Find a comprehensive overview in our BDC Blog Series from last year.

Reduced by Design: Which Databricks Features Are Included - and Missing

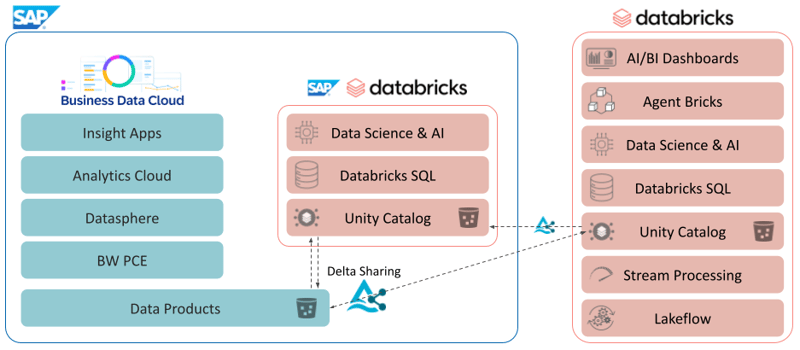

SAP Databricks is a Databricks Workspace with a reduced range of functions that is directly integrated into the SAP Business Data Cloud system landscape. Alongside Datasphere and the Analytics Cloud, SAP Databricks is thus a core component of BDC. Within a BDC instance, exactly one SAP Databricks Workspace is available. It is provided in the cloud infrastructure of the hyperscaler selected for BDC: Amazon Web Services, Google Cloud Platform, or Microsoft Azure.

In terms of functionality, the SAP Databricks Workspace is limited to the core functions of Databricks, which have been established building blocks for many years. Databricks Notebooks serve as an interactive and collaborative development environment for Python and SQL. Scalable compute resources are based on Apache Spark or, for SQL warehouses, on the specially developed Databricks Photon engine. The development of machine learning applications is supported by the MLflow framework and a corresponding user interface for monitoring, model versioning, and evaluation, as well as for serving models as a web service. Last but not least, all these functions are based on the Lakehouse storage model with Delta Tables as the storage format and are connected to the Unity Catalog as the central point of contact for access control and authorization management.

There are also some special features with regard to the connectivity and integration of data from BDC and third-party systems: SAP Databricks can use the Delta Sharing protocol to share tables or views with the rest of the BDC environment, so that data can be used directly and without replication in the Analytics Cloud or Datasphere. Conversely, shared data from BDC or third-party systems can be used in the Databricks workspace. However, Delta Shares cannot be shared with third-party systems from the SAP Databricks workspace: outbound sharing via this efficient access protocol is restricted to the BDC environment.

With regard to incoming data integration, SAP Databricks basically supports any source, provided that the connection can be programmed with Python. On a smaller scale, this is certainly a good addition to native connectors from other BDC components such as Datasphere, but it is not ideal. Apart from the ability to read data from third-party systems via Delta Sharing, the SAP Databricks Workspace lacks low-code connectors for widely used business software and databases, as well as the convenience functions developed by Databricks for reading near-live data streams (Databricks Auto Loader). The Databricks Jobs framework for process control and orchestration is available in SAP Databricks, but it is limited to basic time-based triggers and simple processes. Native Spark jobs, SQL scripts, or pipelines based on the dbt framework cannot be executed as they can in Enterprise Databricks.
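As a minimal sketch of what "programming the connection with Python" can look like in a notebook, the following reads a paginated JSON API using only the standard library. The endpoint layout (`offset` and `limit` query parameters, an `items` array in the response) and all function names are assumptions made for illustration; they are not part of any SAP or Databricks API.

```python
import json
from typing import Callable, Iterator
from urllib.request import urlopen


def http_get_json(url: str) -> dict:
    """Fetch one JSON document over HTTP using only the standard library."""
    with urlopen(url, timeout=30) as response:
        return json.load(response)


def fetch_all_rows(
    base_url: str,
    get_json: Callable[[str], dict] = http_get_json,
    page_size: int = 100,
) -> Iterator[dict]:
    """Yield every row from a hypothetical paginated endpoint.

    Assumes the endpoint accepts `offset` and `limit` and returns
    {"items": [...]}; a page shorter than `page_size` ends the loop.
    """
    offset = 0
    while True:
        page = get_json(f"{base_url}?offset={offset}&limit={page_size}")
        rows = page.get("items", [])
        yield from rows
        if len(rows) < page_size:
            break
        offset += page_size
```

In a notebook, the collected rows could then be turned into a Spark DataFrame and appended to a Delta Table, for example with `spark.createDataFrame(rows).write.format("delta").mode("append").saveAsTable(...)` and a table name of your choice. Passing the fetch function as a parameter keeps the pagination logic testable without network access. Authentication, retries, and schema handling all remain the developer's responsibility here, which is the kind of work a low-code connector would otherwise take over.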

Finally, it should be mentioned that in the SAP Databricks Workspace, all compute resources can only be used in "serverless" mode. Users and administrators of the workspace therefore have no direct way to configure specific resources and scale them for specific tasks.

Enterprise Databricks comes without comparable restrictions and also offers a long list of additional functions and system components. These include, in particular, a dedicated, fully fledged user interface for dashboarding and business intelligence, including LLM-supported interaction options in natural language. In the area of generative AI models and agentic systems, Databricks regularly integrates the necessary tools for developing and operating the latest innovations: Retrieval Augmented Generation (RAG) and Agentic AI can be programmed or assembled from preconfigured building blocks using low-code forms.

Data integration functions in Enterprise Databricks go significantly further than in SAP Databricks: Auto Loader for efficiently loading file content from storage services in near real time and low-code connectors for common software products and databases are available. In addition, Databricks Jobs can be used to compose and orchestrate a wide variety of workflows. With Spark Declarative Pipelines (known as Delta Live Tables until 2025), completely virtual data models can be defined and then kept synchronized and precalculated in real time for maximum performance. The company's own application development framework and a marketplace for exchanging data and applications with other Databricks workspaces round out the offering.
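To give an impression of the convenience Auto Loader adds, here is a minimal sketch of an incremental file ingest with Spark Structured Streaming and the `cloudFiles` source. It assumes it runs on a Databricks cluster where a `spark` session is available; the function name, the JSON file format, and all paths and table names passed in are hypothetical choices for illustration.

```python
def start_autoloader_ingest(spark, source_path, checkpoint_path, target_table):
    """Continuously load new JSON files from `source_path` into a Delta table.

    `spark` is the SparkSession provided by the Databricks runtime.
    The paths and the table name are placeholders chosen by the caller.
    """
    stream = (
        spark.readStream.format("cloudFiles")  # Auto Loader source
        .option("cloudFiles.format", "json")  # format of the incoming files
        .option("cloudFiles.schemaLocation", checkpoint_path)  # inferred schema is tracked here
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)  # remembers which files were processed
        .toTable(target_table)  # starts the stream and writes to the table
    )
```

Without Auto Loader, the bookkeeping of which files have already been processed would have to be implemented by hand in Python, similar to the custom connection code that SAP Databricks requires for third-party sources.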

As an interim conclusion, it can be said that SAP Databricks offers no advantages over Enterprise Databricks in terms of functionality. There are (currently) no exclusive features in the SAP Databricks Workspace for deeper integration with the SAP landscape, e.g., with regard to comprehensive access control.
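The functional comparison so far can be condensed into a small lookup, sketched here in Python. The feature labels are our own shorthand for the capabilities described above, not official product names, and the matrix reflects only what this article states. Delta Sharing is left out because it is covered in the second part.

```python
# Capabilities described above as available in the SAP Databricks Workspace.
SAP_DATABRICKS = frozenset({
    "notebooks",
    "serverless_compute",
    "mlflow",
    "delta_tables",
    "unity_catalog",
    "basic_scheduled_jobs",
})

# Capabilities described above as available only in Enterprise Databricks.
ENTERPRISE_ONLY = frozenset({
    "configurable_compute",
    "low_code_connectors",
    "auto_loader",
    "spark_sql_dbt_job_tasks",
    "dashboards_and_bi",
    "genai_tooling",
    "declarative_pipelines",
    "apps_framework",
    "marketplace",
})

VARIANTS = {
    "SAP Databricks": SAP_DATABRICKS,
    "Enterprise Databricks": SAP_DATABRICKS | ENTERPRISE_ONLY,
}


def missing_features(required, available):
    """Return the required features that a variant does not offer, sorted."""
    return sorted(set(required) - set(available))


def candidate_variants(required):
    """Return the names of all variants that cover every required feature."""
    known = VARIANTS["Enterprise Databricks"]
    unknown = set(required) - known
    if unknown:
        raise ValueError(f"Unknown feature labels: {sorted(unknown)}")
    return [name for name, features in VARIANTS.items() if set(required) <= features]
```

For example, `candidate_variants({"notebooks", "mlflow"})` lists both variants, while adding `"auto_loader"` to the requirements leaves only Enterprise Databricks. Because the SAP Databricks feature set is a strict subset, no requirement expressed in this matrix can ever favor SAP Databricks on functionality alone.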

Platform Operation in Focus: SaaS vs. PaaS

SAP Databricks and Enterprise Databricks place very different demands on an organization in terms of operation. Put simply, SAP Databricks is software-as-a-service (SaaS), while Enterprise Databricks is platform-as-a-service (PaaS). This is a simplification, because SAP Databricks also offers a largely open platform that can be used in many different ways. However, the effort required to make the workspace available to users is reduced to a minimum. Compute resources are completely hidden behind the "serverless" label, and the connection to cloud storage is also handled entirely by the SAP BDC deployment. In effect, SAP Databricks is made available in the customer's SAP BDC system landscape at the touch of a button.

Organizations usually operate Enterprise Databricks themselves or with the support of a service provider in their own cloud tenant. For a quick POC, the necessary resources such as storage and network components can also be rolled out at the desired hyperscaler at the touch of a button. In productive operation, however, information security requirements always necessitate fully defined and traceable concepts and processes, which are best achieved with an infrastructure-as-code approach and the associated CI/CD pipelines. The entry barrier here is significantly higher than in the SAP Business Data Cloud package, which largely relieves the customer of such processes.

Interim Conclusion: SAP Databricks vs. Enterprise Databricks

In the first part of our comparison of Enterprise Databricks and SAP Databricks, it is already clear that the decision between these variants is a trade-off between functionality and operational effort. SAP Databricks requires less expertise in the field of infrastructure DevOps, or at least promises significantly less configuration effort for customers and their teams. On the other hand, some exciting technical features of the Databricks platform are missing. In particular, the fact that many of the low-code components for connecting to third-party systems are not available in an SAP Databricks workspace contradicts, in a sense, the SAP system landscape's claim to make technology accessible to as wide an audience as possible.

In the second part of our system comparison, we take a closer look at what is known as "zero-copy data sharing" and the differences that result from it. We will also take a look at the cost structure and highlight other strategic aspects that are important for making a decision.

If you already want to assess which Databricks variant best fits your system landscape, use cases, and operating model, feel free to get in touch with us. We support you in evaluating, analyzing, and making informed decisions - from the initial assessment to a concrete architecture plan.

FAQ - Enterprise Databricks vs. SAP Databricks

These are some of the most frequently asked questions about Enterprise Databricks vs. SAP Databricks.

Does SAP Databricks offer exclusive functionality compared to Enterprise Databricks?

No. Currently, SAP Databricks does not provide any exclusive functionality compared to Enterprise Databricks.
