With SAP Databricks now firmly established as part of the SAP Business Data Cloud, many organizations are moving beyond feature checklists and asking more strategic questions: How does data sharing work across system boundaries? What does operating Databricks actually cost in practice? And how do these factors influence long-term architectural decisions?

In the first part of our comparison, we examined the functional scope of SAP Databricks and Enterprise Databricks and discussed how the two variants differ in terms of platform operation and technical flexibility. In this second part, we shift the focus to the underlying data architecture and economics. We take a closer look at zero-copy data sharing via Delta Sharing, compare the respective cost models, and outline strategic considerations that play a key role when choosing between SAP Databricks and Enterprise Databricks.

Zero-copy data sharing

The major technological innovation in SAP Business Data Cloud is undoubtedly the deep integration of data lake storage into the platform. In this type of data lakehouse architecture, storage and compute resources are decoupled, allowing the system to scale dynamically and cost-effectively. Unlike a traditional in-memory database, such a system does not require large amounts of RAM on a permanent basis. Zero-copy data sharing follows directly from this architecture: because data in storage is not tied to a particular compute resource, different query engines can access the same data without replicating it. In particular, compatible third-party systems can also access the data, provided that authentication and authorization are negotiated at the technical level.

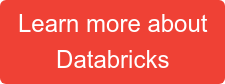

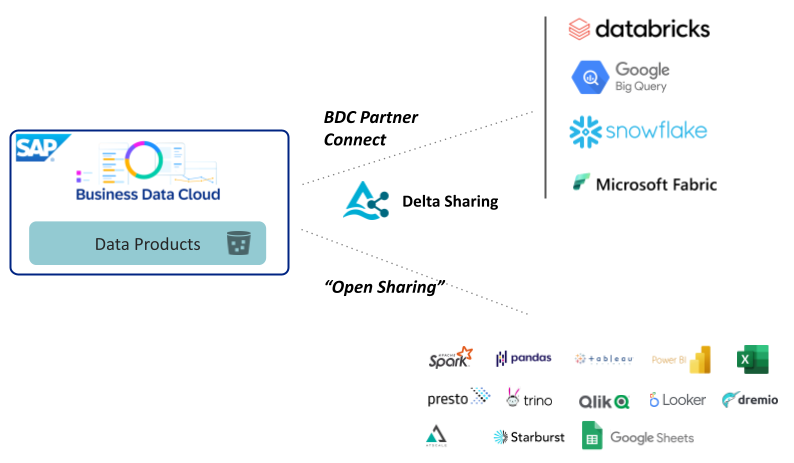

The Delta Sharing Protocol, which was developed primarily by Databricks, handles this technical negotiation of access permissions. SAP Business Data Cloud supports the protocol, enabling not only mutual access to data objects between Datasphere and SAP Databricks within the platform, but also data exchange with third-party systems.
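To make this "technical negotiation" more concrete: in the open Delta Sharing protocol, the data provider hands the recipient a profile file containing a REST endpoint and a bearer token. The recipient's query engine then discovers and reads shared tables through a small set of authenticated REST calls, and the query response points to the underlying data files via short-lived pre-signed URLs, so nothing is copied in advance. The minimal sketch below uses only the Python standard library to build (but not send) such requests. The endpoint URL, token, and share/schema/table names are hypothetical placeholders; in practice a client library such as the open-source `delta-sharing` Python package takes care of these calls.

```python
import json
import urllib.request
from typing import Optional
from urllib.parse import quote

# Hypothetical profile file contents, as a provider would hand them to a recipient.
# The field names follow the Delta Sharing profile file format; the values are placeholders.
PROFILE = json.loads("""
{
  "shareCredentialsVersion": 1,
  "endpoint": "https://sharing.example.com/delta-sharing/",
  "bearerToken": "<token-issued-by-provider>"
}
""")


def _request(path: str, method: str = "GET", body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) an authenticated Delta Sharing REST request."""
    url = PROFILE["endpoint"].rstrip("/") + path
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(url, data=data, method=method)
    # Every call is authorized with the bearer token from the profile file.
    req.add_header("Authorization", f"Bearer {PROFILE['bearerToken']}")
    if data is not None:
        req.add_header("Content-Type", "application/json; charset=utf-8")
    return req


def list_tables(share: str, schema: str) -> urllib.request.Request:
    """Discover the tables a provider has exposed in one schema of a share."""
    return _request(f"/shares/{quote(share)}/schemas/{quote(schema)}/tables")


def query_table(share: str, schema: str, table: str, limit_hint: int = 1000) -> urllib.request.Request:
    """Ask for the files of a shared table; the provider answers with pre-signed URLs."""
    return _request(
        f"/shares/{quote(share)}/schemas/{quote(schema)}/tables/{quote(table)}/query",
        method="POST",
        body={"limitHint": limit_hint},
    )


req = query_table("sales_share", "finance", "revenue")
print(req.get_method(), req.full_url)
```

Note that the token authorizes access to the share as a whole; the protocol itself carries no notion of the individual end user on the recipient side.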

SAP calls the exchange of data via Delta Sharing with third-party systems "BDC Connect." Enterprise Databricks, Google BigQuery, Snowflake, and Microsoft Fabric have already been announced by SAP as compatible third-party systems.

SAP Databricks differs from Enterprise Databricks in its Delta Sharing functionality. The full-featured Databricks platform is already compatible with many third-party systems and allows bidirectional data exchange, provided that the corresponding connectors are available on the other side. SAP Databricks, by contrast, limits connectivity to incoming data shares: an SAP Databricks workspace can read data from a Delta Share published by any source that technically supports the protocol, but outgoing shares can only be created from SAP Databricks to the SAP BDC environment.
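The asymmetry described above can be summarized as a small rule table. The sketch below simply encodes this article's description of the two variants; the platform labels, the counterpart names, and the function `share_allowed` are hypothetical illustrations, not a Databricks or SAP API.

```python
from enum import Enum


class Direction(Enum):
    INCOMING = "incoming"  # the workspace reads a share published by another system
    OUTGOING = "outgoing"  # the workspace publishes a share for another system


def share_allowed(platform: str, direction: Direction, counterpart: str) -> bool:
    """Return whether a Delta Sharing exchange is possible, per the comparison above.

    Hypothetical model: `platform` is "enterprise" or "sap"; `counterpart` names the
    other system, e.g. "sap_bdc", "snowflake", "bigquery". The counterpart is assumed
    to support Delta Sharing.
    """
    if platform == "enterprise":
        # Full platform: bidirectional, given a connector on the other side.
        return True
    if platform == "sap":
        if direction is Direction.INCOMING:
            # SAP Databricks can read from any protocol-compatible source.
            return True
        # Outgoing shares are restricted to the SAP BDC environment.
        return counterpart == "sap_bdc"
    raise ValueError(f"unknown platform: {platform!r}")


print(share_allowed("sap", Direction.OUTGOING, "snowflake"))
```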

Zero-copy data sharing generally promises an exciting future in which large, distributed enterprise platforms require less effort to synchronize data across different subsystems. However, a key question in such landscapes is always uniform authorization management, and the Delta Sharing protocol does not address it.


In simplified terms, a Delta Share grants read access to all data in a table. Finer-grained access rights based on additional attributes, such as row-level security, column masking, or role-based access control that depends on whether a user has been assigned a specific attribute or role, cannot be transmitted to the receiving system via Delta Sharing. Such access concepts become necessary once a data landscape reaches a certain size and complexity, and they currently have to be implemented explicitly in every system that accesses the shared data set.
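Because a share carries only the table data, any row-level security or column masking has to be rebuilt on the receiving side. The minimal sketch below illustrates what that duplicated logic looks like in plain Python. The table contents, the `region` and `salary` columns, and the user attributes are hypothetical; in a real landscape this logic would typically be expressed as row filters, column masks, or secured views in each consuming system.

```python
from dataclasses import dataclass, field

# Hypothetical rows as they arrive through a share: the full table, no access rules attached.
SHARED_ROWS = [
    {"employee": "A. Meyer", "region": "DE", "salary": 72000},
    {"employee": "B. Rossi", "region": "IT", "salary": 65000},
    {"employee": "C. Dubois", "region": "FR", "salary": 70000},
]


@dataclass
class User:
    name: str
    regions: set[str] = field(default_factory=set)  # attribute used for row-level security
    roles: set[str] = field(default_factory=set)    # roles used for column masking


def apply_policies(rows: list[dict], user: User) -> list[dict]:
    """Re-implement the provider's access concept on the receiving side."""
    visible = []
    for row in rows:
        # Row-level security: keep only rows for the user's assigned regions.
        if row["region"] not in user.regions:
            continue
        masked = dict(row)  # copy so the shared rows stay unchanged
        # Column masking: salaries are only visible to users with the "hr" role.
        if "hr" not in user.roles:
            masked["salary"] = None
        visible.append(masked)
    return visible


analyst = User("analyst", regions={"DE", "FR"})
print(apply_policies(SHARED_ROWS, analyst))
```

Every system that reads the same share would need its own equivalent of `apply_policies`, kept in sync by hand, which is exactly the governance gap described above.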

This restriction applies equally to data sharing between SAP BDC and SAP Databricks and between SAP BDC and Enterprise Databricks.

Cost comparison

When comparing two tools, there is no way around considering the expected costs. Databricks generally follows a "pay per use" cost model, so that only resources that are actually used are billed dynamically. In addition, there are costs for the infrastructure used on the respective hyperscaler.

How are the costs for Enterprise Databricks calculated?

Enterprise Databricks charges for resources used in so-called "Databricks Units" (DBUs), which are ultimately billed as a kind of on-demand license fee. As a rule of thumb, you can budget roughly EUR 1 per DBU consumed. This figure already includes the operating costs of the associated virtual machines, which the hyperscaler bills separately on top of the DBU list prices. Both Databricks itself and the hyperscaler's cost monitoring make it possible to track in great detail which resources incurred which costs in which period. This transparency enables targeted optimization, particularly of automated processes, and efficient scaling of compute clusters.
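The rule of thumb translates into a simple back-of-the-envelope calculation. The sketch below assumes the blended price of EUR 1 per DBU stated above (license plus VM costs); the DBU consumption per cluster hour and the usage pattern are hypothetical inputs that you would take from your own workload, since actual DBU rates vary with cloud, region, pricing tier, and compute type.

```python
def monthly_compute_cost_eur(
    dbu_per_hour: float,
    hours_per_day: float,
    days_per_month: int = 22,
    eur_per_dbu: float = 1.0,  # rule-of-thumb blended price from the text
) -> float:
    """Estimate monthly compute cost for one cluster under the EUR 1/DBU rule of thumb."""
    dbus = dbu_per_hour * hours_per_day * days_per_month
    return dbus * eur_per_dbu


# Hypothetical example: a job cluster consuming 8 DBU/h, running 3 h per working day.
print(monthly_compute_cost_eur(dbu_per_hour=8, hours_per_day=3))  # 528.0
```

Because billing is strictly usage-based, halving a job's runtime or cluster size halves the estimate, which is exactly the kind of optimization the granular cost monitoring supports.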

The hyperscaler also charges for storage and network traffic. Storage costs scale approximately linearly, and volume discounts are available. Data transfer (ingress and egress), however, calls for caution: moving very large amounts of data across the cloud provider's boundaries in a short period of time can incur significant additional costs.
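Storage and network costs can be sketched the same way. In this minimal example, storage scales linearly with volume and data transfer is charged per TB moved across the cloud provider's boundary. All unit prices are hypothetical placeholders, not actual hyperscaler list prices; real price sheets add tiers, volume discounts, and free allowances.

```python
def monthly_storage_network_cost_eur(
    stored_tb: float,
    transfer_tb: float,
    eur_per_tb_stored: float,
    eur_per_tb_transfer: float,
) -> float:
    """Linear storage cost plus charges for data moved across the provider's boundary."""
    storage = stored_tb * eur_per_tb_stored
    transfer = transfer_tb * eur_per_tb_transfer
    return storage + transfer


# Hypothetical prices: EUR 20 per TB-month stored, EUR 80 per TB transferred.
steady = monthly_storage_network_cost_eur(50, 0.5, 20, 80)  # 1000 storage + 40 transfer
bulk = monthly_storage_network_cost_eur(50, 40, 20, 80)     # 1000 storage + 3200 transfer
print(steady, bulk)
```

The same stored volume costs about four times as much in the month with a bulk transfer, which illustrates why one-off migrations or large exports deserve separate budgeting.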

How does SAP charge for SAP Databricks?

Cost accounting for SAP Databricks is less transparent, or at least considerably more abstract. Customers pay in SAP "Capacity Units" (CUs), which are purchased in advance as credit for the entire BDC environment and then deducted from the available CU quota according to usage. The CUs charged for SAP Databricks cover both the DBU costs and the infrastructure operating costs. In the SAP CU capacity calculator, separate quotas for storage, compute, and network load must therefore be estimated in advance for operating SAP Databricks. Billing thus appears simpler, but at the same time leaves less room for concrete optimization. Ultimately, as is always the case with SAP products, the actual costs depend on the individual price the customer negotiates with SAP, and full transparency is not guaranteed.
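The prepaid model behaves like a shared credit balance that every BDC component draws from. The sketch below illustrates that mechanism only. The conversion rates from compute, storage, and network usage to Capacity Units are hypothetical placeholders, since actual rates come from SAP's capacity calculator and your individual contract, and the class name `CapacityUnitWallet` is invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical CU conversion rates per unit of usage (not SAP's actual rates).
CU_RATES = {
    "compute_hours": 2.0,
    "storage_tb_month": 5.0,
    "network_tb": 1.5,
}


@dataclass
class CapacityUnitWallet:
    """Prepaid CU balance shared by the entire BDC environment."""
    balance: float
    ledger: list[tuple[str, str, float]] = field(default_factory=list)

    def charge(self, component: str, metric: str, quantity: float) -> float:
        """Deduct the CU cost of some usage from the shared balance and return it."""
        cost = quantity * CU_RATES[metric]
        if cost > self.balance:
            raise RuntimeError(f"CU quota exhausted: need {cost}, have {self.balance}")
        self.balance -= cost
        # In this model the ledger records only CUs per component and metric,
        # with no breakdown into DBU license fees and VM costs.
        self.ledger.append((component, metric, cost))
        return cost


wallet = CapacityUnitWallet(balance=1000.0)
wallet.charge("sap_databricks", "compute_hours", 120)    # 240 CU
wallet.charge("sap_databricks", "storage_tb_month", 10)  # 50 CU
wallet.charge("datasphere", "compute_hours", 200)        # 400 CU
print(wallet.balance)
```

Because all components draw from one balance, a spike in one workload reduces the quota available to the others, and the CU figures alone do not reveal which underlying resource drove the cost.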

A concrete cost forecast for any kind of Databricks deployment is always an individual calculation based on specific (estimated) operating parameters for a specific purpose. If Databricks is used regularly by multiple users in production, Enterprise Databricks is likely to be more cost-effective thanks to its finer-grained configuration and control mechanisms and direct billing by the hyperscaler and Databricks, without SAP as a middleman.

Enterprise Databricks vs. SAP Databricks: Our Conclusion and Outlook

We have compared SAP Databricks and Enterprise Databricks in terms of functional scope, operational effort, and cost structure, and we have presented our findings on the possibilities and limitations of zero-copy data sharing in both variants. Our conclusion stands: SAP Databricks is a significantly limited light version of Enterprise Databricks with simplified operating and billing models for SAP customers.

In the coming years, SAP Databricks could develop genuine functional strengths that make it more attractive within an integrated SAP Business Data Cloud. A fully integrated authorization framework is an obvious candidate: one that standardizes complex role- and attribute-based row-level access rules across Datasphere, SAC, and SAP Databricks without additional programming effort. Genuine, consistent data governance across all system components in an integrated enterprise data catalog is another opportunity for SAP.

At the beginning of 2026, however, we see SAP Databricks primarily as a convenient development environment for certain niches: the development of individual machine learning applications on data that is already fully available in the SAP world; complex data pipelines that are difficult or inefficient to implement with Datasphere's on-board tools; complex evaluations of data that, for organizational reasons, cannot be processed outside the SAP system landscape.

The discussion shows that the choice between SAP Databricks and Enterprise Databricks is not a purely technical one, but a strategic platform decision that affects architecture, operating model, and long-term scalability. To support decision-makers who need a concise, high-level overview, we have summarized the key findings in a One-Page Executive Comparison below. It distills the most important differences and trade-offs, enabling a quick assessment of which Databricks variant best aligns with your organizational priorities and strategic goals.

Executive Comparison Matrix

| Category | Enterprise Databricks | SAP Databricks |
|---|---|---|
| Strategic role | Enterprise-wide data & AI platform | SAP-centric lakehouse extension |
| Functional depth | Very high (full Databricks stack) | Limited (core features only) |
| Operational effort | High (DevOps, IaC, CI/CD required) | Very low (fully managed by SAP) |
| Flexibility & extensibility | Excellent | Limited by design |
| Data integration | Broad (low-code + streaming + batch) | Basic (mainly Python-based) |
| Zero-copy data sharing | Full, bidirectional | Incoming only, outgoing limited to BDC |
| Cost transparency | High (DBUs + hyperscaler costs) | Low (SAP Capacity Units) |
| Cost efficiency at scale | Strong | Often weaker at scale |
| SAP ecosystem fit | Neutral | Native and seamless |
| Ideal use case | Strategic data platform, AI at scale | Entry-level lakehouse for SAP BDC |

If you would like to assess which Databricks variant best fits your system landscape, use cases, and operating model, feel free to get in touch with us. We support you in evaluating, analyzing, and making informed decisions, from the initial assessment to a concrete architecture plan.

